What is Parameter-Efficient Fine-tuning (PEFT)?

August 26, 2024

Parameter-Efficient Fine-Tuning (PEFT) has changed how machine learning practitioners adapt models, especially large-scale ones. As the field of artificial intelligence (AI) develops, the demand for more effective and scalable solutions has grown. PEFT meets this demand by minimizing computational expense, making model fine-tuning more efficient, and getting more value out of models that have already been trained.

This article explains what PEFT is, how it differs from conventional fine-tuning techniques, and why it holds great potential for the future of machine learning.

Understanding Fine-Tuning in Machine Learning

Before going into parameter-efficient fine-tuning, it helps to understand fine-tuning in general. In machine learning, fine-tuning is a method for adapting a previously trained model to a new task or dataset. The model's parameters, which were initially set during pre-training on a large dataset, are adjusted so that the model better suits the demands of the new task.

How Does Fine-Tuning Work?

The fine-tuning process begins with a pre-trained model that has already learned general features from a large dataset. The model is then retrained on a smaller, task-specific dataset, with the goal of optimizing its parameters so that it performs better on the new task. This usually involves using backpropagation to compute gradients and gradient descent to update the model's weights, biases, and other parameters.
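As a minimal sketch of that update loop, the Python below fine-tunes every parameter of a tiny linear model with batch gradient descent on mean squared error. The model, the "pre-trained" values, the dataset, and the learning rate are all hypothetical. For a linear model the gradients can be written out by hand; a deep network would obtain them through backpropagation.

```python
# Minimal sketch of full fine-tuning: every parameter of a tiny linear model
# is updated by batch gradient descent. All values here are hypothetical.


def predict(weights, bias, x):
    """Linear model: y_hat = w . x + b."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def mse(weights, bias, data):
    """Mean squared error over (x, y) pairs."""
    return sum((predict(weights, bias, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune_full(weights, bias, data, lr=0.05, epochs=500):
    """Update all weights and the bias; the caller's pre-trained list is not modified."""
    weights = list(weights)
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in data:
            err = predict(weights, bias, x) - y
            # d(MSE)/dw_i = 2 * err * x_i / n and d(MSE)/db = 2 * err / n
            for i, xi in enumerate(x):
                grad_w[i] += 2 * err * xi / n
            grad_b += 2 * err / n
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


# Hypothetical "pre-trained" parameters and a small task-specific dataset
# generated from y = 2*x0 - x1 + 0.5.
pretrained_w, pretrained_b = [1.0, 1.0], 0.0
task_data = [([1.0, 0.0], 2.5), ([0.0, 1.0], -0.5), ([1.0, 1.0], 1.5), ([2.0, 1.0], 3.5)]

tuned_w, tuned_b = fine_tune_full(pretrained_w, pretrained_b, task_data)
print("loss before:", round(mse(pretrained_w, pretrained_b, task_data), 4))
print("loss after: ", round(mse(tuned_w, tuned_b, task_data), 4))
```

Because every weight and the bias receive a gradient update on every step, this is the full fine-tuning case: the work per step grows with the total number of parameters.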

Consider, for instance, a model that has been pre-trained on a large corpus of text. It has acquired general language features such as grammar, syntax, and common phrases. Fine-tuning this model on a smaller dataset of medical text could make it more adept at understanding and producing content in the medical field.

Fine-Tuning Methods

Fine-tuning methods come in a variety of forms, each with advantages and drawbacks. The most common technique is full fine-tuning, in which every parameter of the model is updated during training. Although it is very effective, this method can be computationally costly, particularly for large models with millions or billions of parameters.

Another technique is layer-wise fine-tuning, in which only selected layers of the model are updated while the rest are kept fixed. This lowers the computational cost but can produce a less specialized model. A related consideration is embeddings vs. fine-tuning. Embeddings are pre-trained representations of data (word vectors, for example) that can be fed into a model as input, whereas fine-tuning modifies the parameters of the model itself.
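The following Python sketch illustrates layer-wise fine-tuning on a hypothetical two-layer linear network. Only the layer groups named in `trainable` are updated, and gradients for a frozen layer are not even accumulated, which is where the compute saving comes from. The network, its "pre-trained" values, the data, and the hyperparameters are illustrative assumptions, not taken from any particular library.

```python
import copy

# Hypothetical two-layer linear network: h = W1 @ x, y_hat = w2 . h + b2.


def forward(params, x):
    h = [sum(w * xi for w, xi in zip(row, x)) for row in params["layer1"]["W"]]
    y_hat = sum(w * hj for w, hj in zip(params["layer2"]["w"], h)) + params["layer2"]["b"]
    return h, y_hat


def mse(params, data):
    return sum((forward(params, x)[1] - y) ** 2 for x, y in data) / len(data)


def train(params, data, trainable, lr=0.05, epochs=2000):
    """Batch gradient descent that updates only the layers listed in `trainable`."""
    params = copy.deepcopy(params)  # leave the pre-trained parameters untouched
    n = len(data)
    for _ in range(epochs):
        g_W1 = [[0.0] * len(row) for row in params["layer1"]["W"]]
        g_w2 = [0.0] * len(params["layer2"]["w"])
        g_b2 = 0.0
        for x, y in data:
            h, y_hat = forward(params, x)
            g = 2 * (y_hat - y) / n  # d(MSE)/d(y_hat) for this example
            for j, hj in enumerate(h):
                g_w2[j] += g * hj
            g_b2 += g
            if "layer1" in trainable:  # skip backprop into a frozen layer
                for j in range(len(h)):
                    for i, xi in enumerate(x):
                        g_W1[j][i] += g * params["layer2"]["w"][j] * xi
        if "layer1" in trainable:
            params["layer1"]["W"] = [
                [w - lr * gw for w, gw in zip(row, g_row)]
                for row, g_row in zip(params["layer1"]["W"], g_W1)
            ]
        if "layer2" in trainable:
            params["layer2"]["w"] = [w - lr * gw for w, gw in zip(params["layer2"]["w"], g_w2)]
            params["layer2"]["b"] -= lr * g_b2
    return params


pretrained = {
    "layer1": {"W": [[1.0, 0.5], [0.0, 1.0]]},
    "layer2": {"w": [1.0, 1.0], "b": 0.0},
}
# Small task dataset generated from y = 2*x0 - x1 + 0.5 (hypothetical).
task_data = [([1.0, 0.0], 2.5), ([0.0, 1.0], -0.5), ([1.0, 1.0], 1.5), ([2.0, 1.0], 3.5)]

tuned = train(pretrained, task_data, trainable={"layer2"})
print("layer1 unchanged:", tuned["layer1"] == pretrained["layer1"])
print("loss before:", round(mse(pretrained, task_data), 4), "after:", round(mse(tuned, task_data), 4))
```

Passing `trainable={"layer1", "layer2"}` turns the same loop back into full fine-tuning, which makes the trade-off between the two approaches easy to compare.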

Few-Shot Learning vs. Fine-Tuning

When it comes to training models for novel tasks with little data, few-shot learning and fine-tuning are frequently contrasted. A few-shot learning model is trained to perform well on a new task from just a handful of examples, often with the help of meta-learning techniques that let it generalize from prior tasks. This highlights a crucial distinction between few-shot learning and fine-tuning: few-shot learning seeks good performance from very little data, whereas fine-tuning usually requires a larger amount of task-specific data.
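One simple way to see how a model can handle a new task from a handful of examples is nearest-centroid (prototype) classification, sketched below in Python. It assumes a frozen, pre-trained encoder has already turned each example into an embedding vector; the 2-D vectors and labels here are invented for illustration. Each class is represented by the mean of its few support examples, and no model parameters are updated. This is a basic few-shot baseline, not a full meta-learning method.

```python
import math


def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(components) / len(vectors) for components in zip(*vectors)]


def build_prototypes(support_set):
    """Map each label to the centroid of its few labelled support embeddings."""
    return {label: centroid(vectors) for label, vectors in support_set.items()}


def classify(prototypes, query):
    """Assign the label whose prototype is closest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(prototypes[label], query))


# Two examples per class: a "2-shot" support set of hypothetical embeddings.
support_set = {
    "positive": [[0.9, 0.2], [0.8, 0.1]],
    "negative": [[0.1, 0.9], [0.2, 0.7]],
}
prototypes = build_prototypes(support_set)
print(classify(prototypes, [0.7, 0.3]))
```

Fine-tuning, by contrast, would change the encoder's own parameters and would typically need more labelled data than the two examples per class used here.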

What is PEFT?

Parameter-efficient fine-tuning (PEFT) is a novel approach that addresses some of the drawbacks of conventional fine-tuning techniques. During the fine-tuning phase, PEFT updates only a subset of the model's parameters rather than all of them. This improves computational efficiency and can lower the risk of overfitting, which happens when a model becomes too closely tailored to its training data.

The PEFT Model

In a PEFT model, most of the pre-trained model's parameters are usually frozen, and only a small portion are updated. These parameters are often selected according to how important or relevant they are to the new task. In this way, the model adapts to the new task while retaining the broad knowledge it acquired during pre-training.
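A minimal Python sketch of the freezing idea: parameters are stored by name, a set of trainable names is chosen, and an SGD step updates only those entries while every other group is copied through unchanged. The parameter names, sizes, dummy gradients, and learning rate are hypothetical. In PyTorch, for instance, the same effect is commonly achieved by setting `requires_grad` to `False` on the frozen parameters.

```python
def sgd_step(params, grads, trainable, lr=0.1):
    """Return updated parameters; only names in `trainable` change, the rest stay frozen."""
    updated = {}
    for name, values in params.items():
        if name in trainable:
            updated[name] = [p - lr * g for p, g in zip(values, grads[name])]
        else:
            updated[name] = list(values)  # frozen: copied through unchanged
    return updated


def trainable_fraction(params, trainable):
    """Share of all parameter values that belong to trainable groups."""
    total = sum(len(v) for v in params.values())
    tuned = sum(len(params[name]) for name in trainable)
    return tuned / total


# Hypothetical pre-trained model: a large "backbone" plus a small task head.
params = {
    "backbone.weight": [0.5] * 1000,
    "backbone.bias": [0.0] * 20,
    "head.weight": [0.1] * 40,
    "head.bias": [0.0] * 2,
}
trainable = {"head.weight", "head.bias"}
grads = {name: [1.0] * len(values) for name, values in params.items()}  # dummy gradients

new_params = sgd_step(params, grads, trainable)
print(f"trainable fraction: {trainable_fraction(params, trainable):.2%}")
print("backbone frozen:", new_params["backbone.weight"] == params["backbone.weight"])
```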

Because of its efficiency, the PEFT model is especially useful for large-scale models, such as those used in computer vision, natural language processing (NLP), and other fields where training models from scratch can be very costly. Additionally, because PEFT requires less computing power, models can be adapted more quickly, enabling faster experimentation and iteration.

PEFT vs. Traditional Fine-Tuning

The primary distinction between traditional fine-tuning and parameter-efficient fine-tuning is the number of parameters that are changed. Traditional fine-tuning updates all of the model's parameters, which can lead to high computing costs and long training times. PEFT, by comparison, streamlines the process by updating only a limited number of parameters.

The comparison also reveals differences in the model's adaptability and performance. Conventional fine-tuning can produce highly specialized models, but it carries a larger risk of overfitting. PEFT, conversely, strikes a balance: it keeps computational overhead low and preserves the general knowledge of the pre-trained model while adapting to a new task.
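To make the difference in the number of updated parameters concrete, the sketch below counts them for a hypothetical stack of 12 fully connected 768 × 768 layers with biases. The PEFT variant trains only the bias vectors, a selective strategy known as BitFit; it is used here purely as one illustrative choice of subset, not as the only or the recommended one.

```python
def linear_layer_params(d_in, d_out):
    """Weights plus biases of one fully connected layer."""
    return d_in * d_out + d_out


def count_updated(num_layers, d, bias_only):
    """Parameters updated by full fine-tuning vs. a bias-only (BitFit-style) subset."""
    if bias_only:
        return num_layers * d
    return num_layers * linear_layer_params(d, d)


full = count_updated(12, 768, bias_only=False)
peft = count_updated(12, 768, bias_only=True)
print(f"full fine-tuning updates {full:,} parameters")
print(f"bias-only PEFT updates   {peft:,} parameters ({peft / full:.2%})")
```

For a square layer with a bias, the ratio works out to 1 / (d + 1), so the trainable fraction shrinks as the layers get wider.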

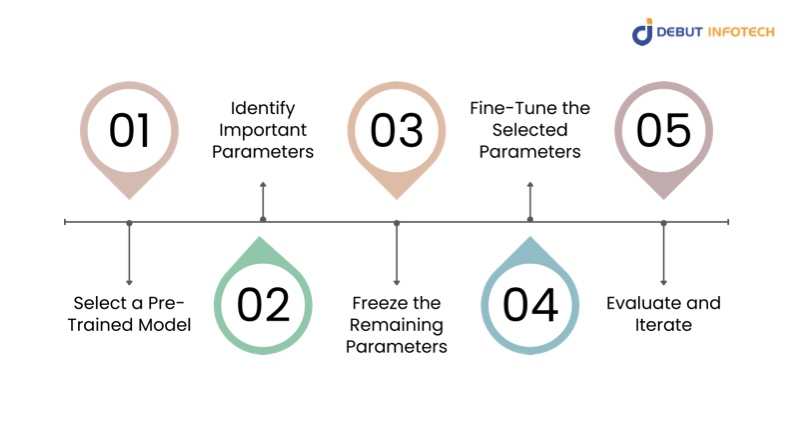

How to Build a PEFT Model

Building a PEFT model involves several steps:

- Select a Pre-Trained Model: Use a model that has already been pre-trained on a large dataset relevant to your field. This model should have learned general characteristics that can be tailored to your particular task.

- Identify Important Parameters: Choose the pre-trained model's parameters that are most relevant to the current task. Several methods, including sensitivity analysis and pruning, can be used to do this.

- Freeze the Remaining Parameters: Freeze all parameters that are not directly relevant to the new task. Because they are not updated during fine-tuning, this lowers computing costs.

- Fine-Tune the Selected Parameters: Retrain the model on the task-specific dataset, updating only the subset of parameters identified as important.

- Evaluate and Iterate: After fine-tuning, assess the model's performance on a validation dataset. If needed, adjust the parameter selection and repeat the fine-tuning procedure to improve performance.
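The five steps can be sketched end to end in Python on a hypothetical three-weight linear model. For step 2 the sketch uses one simple form of sensitivity analysis: each weight is ranked by the absolute gradient of the task loss at its pre-trained value. The model, the data, `k`, and the learning rate are illustrative assumptions rather than a prescribed recipe.

```python
# Sketch of the five steps on a hypothetical 3-weight linear model (no bias).


def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def mse(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def gradient(w, data):
    """Gradient of the mean squared error with respect to each weight."""
    n = len(data)
    grad = [0.0] * len(w)
    for x, y in data:
        err = predict(w, x) - y
        for i, xi in enumerate(x):
            grad[i] += 2 * err * xi / n
    return grad


def select_parameters(w, data, k):
    """Step 2: rank weights by |dLoss/dw| at the pre-trained values; keep the top k."""
    sensitivity = [abs(g) for g in gradient(w, data)]
    top_k = sorted(range(len(w)), key=lambda i: sensitivity[i], reverse=True)[:k]
    return sorted(top_k)


def fine_tune_selected(w, data, selected, lr=0.1, epochs=200):
    """Steps 3-4: indices not in `selected` stay frozen; only selected weights move."""
    w = list(w)
    for _ in range(epochs):
        grad = gradient(w, data)
        for i in selected:
            w[i] -= lr * grad[i]
    return w


# Step 1: hypothetical pre-trained weights; the task's true weights are [1, 3, 1].
pretrained = [1.0, 1.0, 1.0]
train_set = [([1, 0, 0], 1), ([0, 1, 0], 3), ([0, 0, 1], 1), ([1, 1, 1], 5), ([1, 2, 0], 7)]
val_set = [([2, 1, 1], 6), ([0, 2, 1], 7)]

selected = select_parameters(pretrained, train_set, k=1)
tuned = fine_tune_selected(pretrained, train_set, selected)
# Step 5: evaluate on held-out data; if the score is poor, revisit k or the selection.
print("selected:", selected)
print("validation MSE before:", mse(pretrained, val_set), "after:", round(mse(tuned, val_set), 6))
```

Here only one of the three weights is wrong for the new task, so the gradient ranking singles it out and updating that weight alone is enough; real models rarely separate this cleanly, which is why step 5 loops back to the selection.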

PEFT Library

The PEFT library is a set of resources and tools intended to make parameter-efficient fine-tuning easier to implement. It contains pre-built functions for choosing which parameters to train, freezing the rest, and fine-tuning models efficiently.

By using the library to streamline building and optimizing models, developers can cut down on the time and effort needed to put PEFT techniques into practice. Additionally, the library integrates with a number of widely used machine learning libraries, which broadens its user base.
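In the widely used Hugging Face `peft` library, for example, many methods add a small number of new trainable parameters instead of unfreezing existing ones; LoRA (low-rank adaptation) is one well-known example. The dependency-free Python sketch below illustrates the LoRA idea rather than the library's API. The pre-trained weight `W` stays frozen, and the layer learns a low-rank update `(alpha / r) * B @ A`, with `B` initialized to zeros so the adapted layer initially behaves exactly like the original. The class name, the initialization scale, and the sizes are assumptions made for illustration.

```python
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]


class LoRALinear:
    """Frozen d x k weight W plus a trainable rank-r update scaled by alpha / r."""

    def __init__(self, W, r, alpha, seed=0):
        rng = random.Random(seed)
        d, k = len(W), len(W[0])
        self.W = W  # frozen pre-trained weight: never updated during fine-tuning
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]  # trainable, r x k
        self.B = [[0.0] * r for _ in range(d)]  # trainable, d x r, zero-initialized
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))  # B @ (A @ x); the d x k product is never formed
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_count(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def lora_param_count(d, k, r):
    """Trainable parameters of a rank-r update to a d x k matrix: r * (d + k)."""
    return r * (d + k)


W = [[float(i + j) for j in range(8)] for i in range(8)]  # hypothetical pre-trained 8 x 8 weight
layer = LoRALinear(W, r=1, alpha=2)
x = [1.0] * 8
print(layer.forward(x) == matvec(W, x))  # zero-initialized B leaves the output unchanged
print(layer.trainable_count(), "trainable vs", 8 * 8, "frozen")
print(lora_param_count(4096, 4096, 8), "vs", 4096 * 4096)
```

In the library itself this is configured rather than hand-written (for example through a `LoraConfig` passed to `get_peft_model`), but the parameter arithmetic is the same: r(d + k) trainable values instead of d × k.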

Embeddings vs Fine-Tuning in PEFT

The choice between fine-tuning and embeddings becomes more important in parameter-efficient fine-tuning. Embeddings are learned representations of data, such as feature vectors in computer vision or word vectors in natural language processing. Because they capture broad patterns, these embeddings can be used as model input.

When using PEFT, embeddings can supply much of the model's general knowledge, allowing the fine-tuning procedure to focus on adjusting a limited number of parameters. Full fine-tuning, in comparison, retrains the entire model, which usually calls for more processing power.

Combining embeddings with PEFT is an effective way to adapt models to new tasks while reducing both the risk of overfitting and the computational cost.
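A small Python sketch of that combination: a table of frozen word vectors supplies the general knowledge, sentences are embedded by averaging their word vectors, and only a logistic-regression head (two weights plus a bias) is trained. The vocabulary, vectors, and labels are invented for illustration; a real system would take its embeddings from a pre-trained model.

```python
import math

# Hypothetical frozen "pre-trained" word vectors (2-D so the numbers stay readable).
EMBEDDINGS = {
    "great": [0.9, 0.1], "good": [0.8, 0.2], "love": [0.85, 0.15],
    "bad": [0.1, 0.9], "awful": [0.05, 0.95], "hate": [0.15, 0.85],
    "the": [0.5, 0.5], "movie": [0.5, 0.5],
}
DIM = 2


def embed(text):
    """Average the frozen vectors of known words; these values are never trained."""
    vectors = [EMBEDDINGS[word] for word in text.split() if word in EMBEDDINGS]
    if not vectors:
        return [0.0] * DIM
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples, lr=1.0, epochs=2000):
    """Logistic-regression head: the only trainable parameters are `w` and `b`."""
    w, b = [0.0] * DIM, 0.0
    features = [(embed(text), label) for text, label in examples]
    n = len(features)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * DIM, 0.0
        for x, y in features:
            # Derivative of the log loss with respect to the logit is (p - y).
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            grad_w = [g + err * xi / n for g, xi in zip(grad_w, x)]
            grad_b += err / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, text):
    x = embed(text)
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


train_examples = [("great movie", 1), ("love the movie", 1), ("awful movie", 0), ("hate the movie", 0)]
w, b = train_head(train_examples)
print(round(predict(w, b, "good movie"), 3), round(predict(w, b, "bad movie"), 3))
```

Only three numbers are learned here, while the embedding table is reused as-is, which mirrors the division of labour described above.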

Applications of PEFT

Parameter-efficient fine-tuning has a wide range of applications across various domains, including:

1. Natural Language Processing (NLP)

In NLP, PEFT is especially useful for adapting large language models to particular applications such as machine translation, named entity recognition, and sentiment analysis. By fine-tuning only a subset of parameters, PEFT allows efficient adaptation while preserving the pre-trained model's general language understanding.

2. Computer Vision

In computer vision, PEFT helps adapt previously trained models to new tasks such as facial recognition, image segmentation, and object detection. This approach can also make it practical to adapt models for edge devices with constrained computational resources.

3. Autonomous Systems

PEFT makes it possible for autonomous systems, such as drones or self-driving cars, to adapt their models efficiently to new tasks or surroundings. This is especially important for real-time applications, where computing performance is crucial.

4. Healthcare

PEFT can be applied in the healthcare industry to optimize models for specific medical tasks, such as forecasting patient outcomes from electronic health records or identifying diseases from medical images. This approach makes it possible to deploy AI models in clinical settings more quickly.

Future Directions and Challenges

Although parameter-efficient fine-tuning has many benefits, there are still open issues to resolve and research opportunities to explore. Key topics for further research include the following:

1. Automated Parameter Selection

Choosing which parameters to fine-tune is one of the difficulties with PEFT. Automated techniques for parameter selection could make PEFT more widely usable and reduce the need for manual intervention.

2. Transferability Across Domains

Investigating how well PEFT models transfer across domains could broaden their applicability. For instance, a model optimized for one NLP task might be transferred to another with only minor adjustments.

3. Integration with Other Methods

Integrating PEFT with other methods, such as few-shot learning or meta-learning, may improve the efficacy and efficiency of the fine-tuning process. This could result in highly adaptive, computationally efficient models.

4. Ethical Considerations

As with any other AI technique, ethical issues need to be taken into account when using PEFT models. This includes making sure the models are impartial, fair, and free from harmful biases and stereotypes.

Leveraging PEFT in Machine Learning Development Services

Applying parameter-efficient fine-tuning can yield significant benefits for machine learning development services. By integrating PEFT into their process, machine learning consulting companies can maximize model performance while minimizing computational expense and resource usage. This efficiency not only speeds up development but also makes machine learning solutions more scalable and adaptable across a wide range of industries.

Enhancing Model Performance with PEFT

Machine learning development services that integrate parameter-efficient fine-tuning can make more precise, targeted model modifications. This is especially helpful in sectors where computational resources are limited, or where models must be deployed on devices with less processing capacity, such as edge computing environments.

Additionally, machine learning consulting companies can use PEFT to provide their clients with specialized solutions. By fine-tuning only the most relevant parameters, consultants can deliver efficient, highly specialized models that match each customer's specific demands without incurring the cost of retraining the complete model.

Custom AI Models with PEFT

One of PEFT's key benefits is its capacity to build custom AI models suited to particular tasks or domains. With PEFT, high levels of customization, such as tailoring a language model to a given dialect or fine-tuning a vision model for a particular set of images, are achievable without the high computational costs usually associated with model training.

When machine learning consulting companies use PEFT, the AI solutions they build for clients can be more adaptable and responsive. A client in the healthcare industry might need a model optimized for early disease diagnosis, whereas a client in the finance sector might need one that can forecast stock market patterns. PEFT enables these businesses to meet such a wide range of requirements efficiently.

PEFT in Multimodal Models

Multimodal models are an intriguing application of parameter-efficient fine-tuning. These models process and integrate data from several sources (such as text, images, and audio), which lets them produce more thorough and precise insights.

PEFT is very helpful for optimizing multimodal models because it allows selective modification of the parameters relevant to each data modality. This helps the model combine and handle many kinds of data effectively, which makes it well suited to sophisticated applications such as improved medical diagnostics or autonomous systems.

Fine-Tuning Methods for Specific AI Applications

Different AI applications call for different fine-tuning methods, and the choice of method can strongly affect the model's effectiveness, versatility, and performance. Here is how PEFT fits into several AI applications:

Natural Language Processing (NLP)

NLP models such as GPT, BERT, and T5 have demonstrated remarkable adaptability in understanding and generating human language. Fine-tuning these models for particular applications, such as machine translation or sentiment analysis, can take a lot of resources. By focusing only on the most important parameters, PEFT streamlines this process, making fine-tuning both more efficient and less costly.

Computer Vision

Computer vision models must be highly specialized for tasks such as image segmentation, object detection, and classification. By focusing on the layers or features most important for visual processing, PEFT makes it possible to fine-tune these models with less time and fewer resources while preserving high accuracy.

Generative AI Development

PEFT can be very helpful in generative AI development, where models generate new content (such as text, images, or music). By fine-tuning only specific parts of the model, developers can get high-quality results using fewer resources. This is particularly helpful in creative industries, where quick iteration is essential.

AI and Data Management

For enterprises that process and analyze vast amounts of data, effective AI and data management are essential. PEFT allows more efficient model fine-tuning, reduces the computational load on data management systems, and can help deliver faster, more accurate insights.

Do You Require Professional Help to Adjust Your AI Models?

Partner with Debut Infotech to leverage PEFT to enhance your AI models for optimal performance through tailored solutions.

Conclusion

With its more efficient and scalable approach to model adaptation, parameter-efficient fine-tuning (PEFT) is a noteworthy development in the field of machine learning. By updating only a subset of parameters during fine-tuning, PEFT lowers processing costs and allows faster iteration and experimentation. This makes it a strong option for creating custom AI models in a variety of fields, such as generative AI, computer vision, and natural language processing.

Moreover, integrating PEFT into machine learning development services enables businesses to provide their clients with more specialized, efficient, and affordable AI solutions. As the demand for AI grows, PEFT will become increasingly important for building sophisticated, scalable, and flexible AI models.

To sum up, parameter-efficient fine-tuning is not just a technical technique but a strategic one that can improve the efficacy and efficiency of AI models across a variety of applications. Whether you are working on generative AI applications, vision systems, or language models, PEFT provides an effective toolkit for optimizing performance while minimizing computing cost. As AI continues to develop, understanding methods like PEFT will be essential to staying at the cutting edge of machine learning.

Frequently Asked Questions

Q. What is Parameter-Efficient Fine-Tuning (PEFT)?

Parameter-Efficient Fine-Tuning (PEFT) is a technique in machine learning that allows for the fine-tuning of only a subset of a pre-trained model’s parameters. This approach reduces computational costs and speeds up the fine-tuning process, making it more efficient while maintaining high model performance.

Q. How does fine-tuning differ from parameter-efficient fine-tuning?

Traditional fine-tuning involves adjusting all parameters of a pre-trained model for a specific task, whereas parameter-efficient fine-tuning (PEFT) focuses on updating only a selected subset of parameters. PEFT is more resource-efficient and faster, making it ideal for scenarios with limited computational power.

Q. What are the advantages of PEFT over traditional fine-tuning methods?

PEFT offers several advantages, including reduced computational requirements, faster fine-tuning, and the ability to achieve high performance with fewer resources. This makes it particularly beneficial for deploying models in resource-constrained environments or when rapid iteration is needed.

Q. How does PEFT relate to few-shot learning?

Both PEFT and few-shot learning aim to adapt models to new tasks with minimal resources. Few-shot learning focuses on training models from very few examples, while PEFT fine-tunes a model by adjusting only a small number of parameters, making adaptation more efficient and specialized for specific tasks.

Q. Can PEFT be used with any machine learning model?

PEFT can be applied to a wide range of machine learning models, especially large pre-trained models in natural language processing, computer vision, and multimodal tasks. Its versatility makes it a valuable tool across various machine learning applications.

Q. What role does the PEFT library play in fine-tuning models?

The PEFT library provides tools and frameworks that simplify the implementation of parameter-efficient fine-tuning. It helps developers apply PEFT techniques to their models with ease, allowing for efficient model optimization and customization.

Q. Why is PEFT important for machine learning consulting companies?

PEFT enables machine learning consulting companies to offer more efficient and customized solutions to their clients. By reducing the resources needed for model fine-tuning, PEFT allows consultants to deliver high-performing AI models tailored to specific needs, even in resource-constrained environments.
