Advanced Optimization Techniques for Generative AI Models

October 16, 2025

Here’s a controversial truth: in the race to adopt generative AI, most companies aren’t failing because their models are weak. They’re failing because their models are wasteful. Bigger isn’t always better. In fact, pouring money into oversized, under-optimized systems is quietly draining budgets and stalling real business impact.

So, what’s the smarter play? Shifting the focus from raw scale to generative AI optimization—making models leaner, sharper, and more efficient for enterprise realities. That’s where real ROI lives, and it’s the difference between AI that dazzles in a demo versus AI that delivers in production.

In this article, we’ll unpack:

- The business case for optimization

- Core challenges in generative AI performance

- Seven advanced techniques to make models faster, cheaper, and smarter

- A forward-looking conclusion and FAQs

And yes, that’s exactly what we’ve got on offer today—no fluff, just optimization gold.

The Business Case for AI Optimization

So, your business has built a shiny new AI model. Congratulations, but that’s only half the battle.

You must also ensure that the model is optimized to operate at scale. This means it must deliver consistent, accurate, and cost-effective results across critical workflows.

Here’s an example: imagine your generative AI model produces compelling outputs, but it also requires massive computing power or delivers results too slowly.

How do you think that would impact your ROI?

Well, certainly it’ll put a dent in your ROI.

In fact, without generative AI optimization, organizations face challenges such as:

- High cloud and infrastructure costs

- Slow customer interactions

- Inconsistent performance across use cases

And when your business encounters any of these challenges in today’s competitive business world, it can slowly impact your customer satisfaction and revenue growth metrics.

That’s where optimization comes into the picture!

Optimization transforms AI from an experimental tool into a dependable business enabler.

With the help of AI model training and optimization services, businesses can reduce operating costs, streamline deployments, and ensure that models are tailored to meet domain-specific requirements. All these things represent the differentiating factors between an AI that looks impressive in the demo stage and one that consistently drives measurable value in production.

However, cost savings are not the only reason to optimize AI models. Other tangible reasons include:

- Greater scalability to support enterprise-wide adoption

- Higher reliability and accuracy in decision-making

- More efficient use of data and computing resources

- Smarter personalization for customers and end-users

For example, advanced optimization systems enable businesses to fine-tune generative models for specialized tasks, leading to faster insights, leaner operations, and sustainable growth.

As a result, the overarching business case for AI optimization is about strategic impact. It’s about making sure that your generative AI investments not only keep pace with innovation but also translate into sustainable advantages.

Whether it is higher productivity, better customer experiences, or faster time-to-market, optimizing AI models can help you achieve these goals, and that’s the business case for AI model optimization.

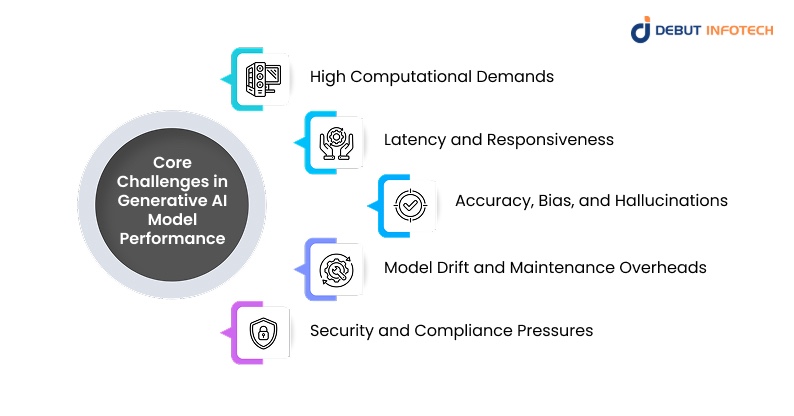

Core Challenges in Generative AI Model Performance

Apart from the fact that optimizing AI models increases the chances of getting a higher ROI, the need for this optimization also arises from the significant performance challenges that can stall adoption or inflate costs if left unaddressed.

Below, we have highlighted some of the most common ones:

1. High Computational Demands

It takes a massive amount of computing resources to train and run large AI models. As a result, your business might need expensive cloud commitments and even specialized hardware. Without optimization, the cost curve can rise faster than the business benefits.

2. Latency and Responsiveness

There is a high chance that your AI model will run slowly if you don’t design it with performance optimization in mind. This especially applies to customer-facing applications like chatbots, recommendation engines, and virtual assistants. And the thing with slow response times is that they frustrate users and weaken brand perception. You don’t want that.

3. Accuracy, Bias, and Hallucinations

Generative AI models can hallucinate by producing factually incorrect outputs that can undermine your brand’s authenticity and credibility if left unchecked. Bias in training data can also creep into outputs, creating compliance risks and reputational concerns.

You can avoid these to a great extent through AI optimization.

4. Model Drift and Maintenance Overheads

The AI models you trained today can quickly become outdated tomorrow due to the constantly evolving business climate. That’s why you need to train and retrain your models continuously.

However, the problem with this is that constant training and retraining lead to operational complexity. In fact, many organizations often underestimate the ongoing maintenance required to keep generative AI models relevant.

5. Security and Compliance Pressures

Adoption of AI doesn’t occur in a vacuum. Strict data governance regulations apply to businesses in healthcare, finance, and other regulated sectors. Optimization becomes even more challenging when models must maintain their performance while adhering to regulatory rules.

So, how do we overcome these challenges through AI optimization?

We discuss some advanced techniques for AI optimization in the next section.

Let’s check it out!

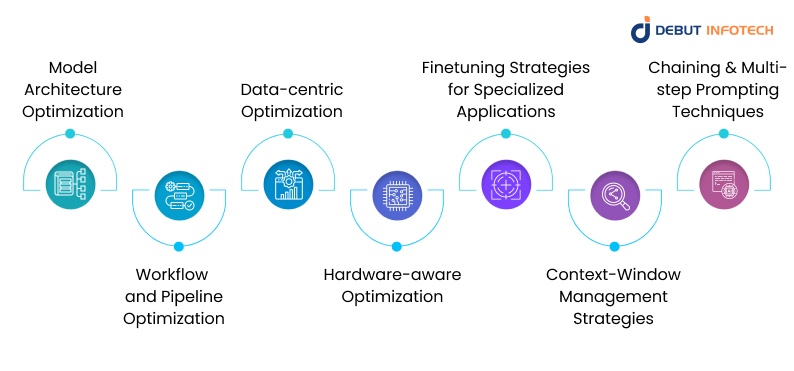

Top 7 Advanced Optimization Techniques for Generative AI Models

The following are some key advanced techniques for making AI models smarter, leaner, and more efficient for real-world use:

1. Model Architecture Optimization

One of the most direct ways to improve generative AI performance is by rethinking the structure of the model itself.

You see, although large, unoptimized designs might produce amazing results, speed, cost, and scalability are sometimes sacrificed in the process. With generative AI optimization, businesses can achieve comparable performance with significantly fewer resources.

So, what does this entail?

The following are some of the most common architecture-level adjustments that can make your AI models perform better:

- Pruning: This process involves eliminating unnecessary parameters from the model without significantly compromising accuracy, thereby speeding up inference and reducing its cost.

- Quantization: This is the process of representing the model’s numbers with lower precision (for example, 8-bit integers instead of 32-bit floats) in order to minimize computational overhead and memory consumption.

- Knowledge distillation: This is the process of teaching a smaller “student” model to behave similarly to a bigger, more intricate “teacher” model in order to retain much of the teacher’s capability with fewer resources.
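
To make pruning and quantization more concrete, here is a minimal sketch in plain Python. It assumes a toy list of weights, a symmetric 8-bit scheme, and simple magnitude-based pruning; the function names are hypothetical, and production frameworks use more sophisticated variants of both ideas.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization: map floats to integer codes in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [round(w / scale) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from the integer codes."""
    return [c * scale for c in codes]


def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (unstructured pruning)."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


# Toy weights, chosen only for illustration.
weights = [0.82, -0.03, 0.41, -1.27, 0.005, 0.66]

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

pruned = prune_by_magnitude(weights, sparsity=0.5)
```

The rounding error per weight is bounded by half the scale, which is the accuracy given up in exchange for storing each value in one byte instead of four.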

When your business applies these AI model training and optimization strategies, it becomes easier to adopt lighter models. These lighter models run faster on existing hardware, allowing you to reduce infrastructure costs and support more responsive customer-facing applications.
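
The student-teacher idea behind knowledge distillation can be sketched as a loss function. This is an illustrative example, not a full training loop: it assumes the teacher and student each emit a list of logits for the same input, and it uses a temperature of 2.0 and the commonly used temperature-squared scaling, both of which are assumptions here.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from the softened teacher distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # Scaling by temperature squared is a common convention, assumed here.
    return kl * temperature ** 2


# Hypothetical logits for a three-class output.
teacher = [4.0, 1.0, 0.2]
close_student = [3.5, 1.2, 0.1]
far_student = [0.1, 1.0, 4.0]

loss_close = distillation_loss(close_student, teacher)
loss_far = distillation_loss(far_student, teacher)
```

A student whose outputs already resemble the teacher’s gets a small loss, while one that disagrees gets a large loss, which is the signal that pulls the smaller model toward the larger one’s behavior.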

2. Workflow and Pipeline Optimization

As the name implies, you can also improve the performance of an AI model by making the surrounding workflow and pipeline more efficient. For instance, many organizations often struggle with delays in retraining, deployment bottlenecks, or model drift over time. So, if you’re able to clean up and optimize the pipelines, then you get to ensure the AI systems remain agile and reliable in production.

Wondering how to do this?

The following are some effective strategies:

- Automating retraining cycles to maintain model alignment with changing business data.

- Including monitoring systems that quickly identify abnormalities or performance declines.

- Using MLOps best practices to streamline deployment operations, guaranteeing quicker experiments before going live.

Beyond speed, workflow optimization lowers the hidden costs of generative AI model maintenance and lays the groundwork for scalable, enterprise-wide adoption.
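
As a rough illustration of monitoring combined with automated retraining, the sketch below tracks a rolling average of a quality score and flags when it falls below a baseline. The class name, the baseline of 0.90, the window of three evaluations, and the tolerance of 0.05 are all hypothetical values chosen for the example.

```python
from collections import deque


class DriftMonitor:
    """Flags a retraining need when the rolling score drops below baseline - tolerance."""

    def __init__(self, baseline, window=5, tolerance=0.05):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)  # keeps only the most recent scores

    def record(self, score):
        """Store a new evaluation score and report whether retraining is needed."""
        self.scores.append(score)
        return self.needs_retraining()

    def needs_retraining(self):
        # Wait until the window is full so a single bad score does not trigger.
        if len(self.scores) < self.scores.maxlen:
            return False
        rolling = sum(self.scores) / len(self.scores)
        return rolling < self.baseline - self.tolerance


monitor = DriftMonitor(baseline=0.90, window=3, tolerance=0.05)
weekly_scores = [0.91, 0.89, 0.88, 0.82, 0.80, 0.79]
flags = [monitor.record(s) for s in weekly_scores]
```

In a real pipeline the flag would typically kick off a retraining job or an alert rather than just being collected in a list.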

3. Data-centric Optimization

You can also improve or optimize the performance of an AI model by improving the quality of its training datasets. When it comes to generative AI, the saying “garbage in, garbage out” holds true.

Poorly curated or biased datasets can cripple even the most advanced models. On the other hand, optimizing at the data level often yields better results than simply scaling the model itself.

So, what are some data-centric optimization techniques to consider?

- Removing noise and improving data quality through data curation and cleaning

- Filling gaps in underrepresented areas using synthetic data generation

- Refining outputs against business objectives using Reinforcement Learning From Human Feedback (RLHF)

- Active learning loops where models prioritize data samples that will most improve accuracy.
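
One simple way to picture an active learning loop is uncertainty sampling: send the samples the model is least sure about to human labelers first. The sketch below assumes the model exposes class probabilities per sample; the sample IDs, probabilities, and labeling budget are invented for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy of a probability distribution; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, budget):
    """Return the IDs of the `budget` samples with the most uncertain predictions."""
    ranked = sorted(
        predictions,
        key=lambda sample_id: entropy(predictions[sample_id]),
        reverse=True,
    )
    return ranked[:budget]


# Hypothetical model outputs: sample ID -> class probabilities.
predictions = {
    "ticket-1": [0.98, 0.01, 0.01],
    "ticket-2": [0.40, 0.35, 0.25],
    "ticket-3": [0.70, 0.20, 0.10],
    "ticket-4": [0.34, 0.33, 0.33],
}

chosen = select_for_labeling(predictions, budget=2)
```

Here the two near-uniform predictions are picked, because labeling them is likely to teach the model more than labeling a sample it already classifies with 98% confidence.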

4. Hardware-aware Optimization

Just as crucial as the models themselves is the generative AI infrastructure. Large models running on below-par technology can lead to wasteful spending and inefficiency. Therefore, with the help of hardware optimization, you can align the technology stack with your unique business requirements.

Some specific options for executing hardware optimization include:

- Processor selection: Choosing the best processors, such as GPUs or TPUs, for training and inference workloads.

- Distributed training configurations: Dividing tasks among several machines to increase processing speed.

- Custom silicon (ASICs): Purchasing specialized chips made especially for AI workloads.

- Edge deployment: Using local devices to run lightweight models in order to lower bandwidth costs and latency.

Businesses may save money and scale AI systems more quickly and sustainably by matching model requirements with infrastructure strategy.
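
Matching a model to hardware often starts with back-of-the-envelope arithmetic: the memory needed for weights is roughly the parameter count times the bytes per parameter. The sketch below assumes a hypothetical 7-billion-parameter model, a 24 GB accelerator, and a 1.2x overhead factor for activations and runtime buffers; that factor is a placeholder, not a measured value.

```python
# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params, precision):
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_device(num_params, precision, device_memory_gb, overhead=1.2):
    """Rough check; `overhead` is an assumed multiplier for non-weight memory."""
    return weight_memory_gb(num_params, precision) * overhead <= device_memory_gb


num_params = 7_000_000_000  # hypothetical model size
device_gb = 24              # hypothetical accelerator memory

report = {
    precision: (weight_memory_gb(num_params, precision),
                fits_on_device(num_params, precision, device_gb))
    for precision in BYTES_PER_PARAM
}
```

Under these assumptions the model needs 28 GB at fp32 and does not fit, but fits at fp16 (14 GB) or int8 (7 GB), which shows how quantization and hardware choice interact.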

5. Fine-tuning Strategies for Specialized Applications

Fine-tuning strategies refer to the various techniques for adapting a general AI model for a specific, specialized purpose or application within your business setting. Generic models are often too broad for industry-specific tasks. However, fine-tuning them allows businesses to adapt pre-trained models for specialized use cases without having to start from scratch, saving both time and money.

You can fine-tune AI models using a number of approaches, some of which include the following:

- Transfer Learning: This means adapting an existing model to a specific domain with minimal retraining.

- Low-Rank Adaptation (LoRA): This is a cost-effective fine-tuning technique that keeps the original model weights frozen and trains only a small number of added low-rank parameters.

- Continuous Learning Loops: Updating models incrementally as new business data becomes available.

Organizations can keep compute costs under control while achieving high accuracy for specialized applications, such as tailored healthcare recommendations or compliance checks in finance, through targeted fine-tuning.
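
To see why LoRA is inexpensive, it helps to count parameters. For a frozen weight matrix W, LoRA trains two small matrices B and A of rank r and adds their product, scaled by alpha / r, to the layer’s output. The sketch below is a toy illustration in plain Python; the 4096 x 4096 layer size, rank 8, and the tiny matrices are assumptions made for the example.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the low-rank pair B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """Frozen base output plus the scaled low-rank update: W x + (alpha / rank) * B (A x)."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


# Parameter count for one hypothetical 4096 x 4096 layer at rank 8.
full = 4096 * 4096
added = lora_trainable_params(4096, 4096, rank=8)
fraction = added / full

# Tiny forward pass: B starts at zero, so the adapted model initially matches the base.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
A = [[1.0, 0.0, 0.0]]
B_zero = [[0.0], [0.0]]
B_trained = [[1.0], [0.0]]
x = [2.0, 3.0, 4.0]

before = lora_forward(W, A, B_zero, x, alpha=1.0, rank=1)
after = lora_forward(W, A, B_trained, x, alpha=1.0, rank=1)
```

In this example the adapter adds 65,536 trainable values against roughly 16.8 million frozen ones, which is under half a percent of the layer.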

6. Context-Window Management Strategies

An LLM’s context window refers to the amount of text (in tokens) that the model can consider (or remember) at any given time. Therefore, when the LLM has a larger context window, it can process longer inputs and incorporate a greater amount of context into its output.

While LLMs rely heavily on these context windows for more accurate responses, extending them drastically increases computational costs.

So, what are some strategies for managing these context windows?

- Retrieval-Augmented Generation (RAG): Using external knowledge bases to inject relevant information into the prompt instead of extending context length.

- Hybrid context handling: Balancing short-term and long-term memory to keep prompts lean without losing important context.

- Dynamic window sizing: Adjusting context length on demand based on task complexity.
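
The retrieval step in RAG can be sketched without any external services. The example below scores documents against a query using bag-of-words cosine similarity and places only the best match into the prompt. Real systems usually rely on learned embeddings and a vector store; the word-count scoring, the sample documents, and the prompt wording here are simplifying assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents that share vocabulary with the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine(query_vec, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, documents, top_k=2):
    """Inject only the retrieved passages into the prompt."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge base entries.
documents = [
    "Refunds are processed within 5 business days of approval.",
    "Our support desk is open Monday to Friday.",
    "Premium plans include priority onboarding.",
]
query = "When are refunds processed?"
prompt = build_prompt(query, documents)
```

Only the refund policy ends up in the prompt, so the model sees one relevant sentence instead of the entire knowledge base.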

For executives, this translates into lower operating expenses and faster inference times without sacrificing accuracy or depth of output.
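
Keeping prompts lean can also be as simple as enforcing a token budget on conversation history. The sketch below keeps the most recent messages that fit within a budget. Counting tokens by splitting on whitespace is a stand-in for a real tokenizer, and the budget of 10 is arbitrary.

```python
def count_words(text):
    """Crude token estimate; a real system would use the model's tokenizer."""
    return len(text.split())


def trim_history(messages, max_tokens, count_tokens=count_words):
    """Keep the most recent messages whose combined size fits the budget."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


# Hypothetical support conversation.
history = [
    "Hi, I need help with my invoice.",
    "Sure, what is the invoice number?",
    "It is INV-1042.",
    "Thanks, I can see a duplicate charge.",
]

trimmed = trim_history(history, max_tokens=10)
```

Older turns that no longer fit could be replaced by a short summary, which is one way to combine short-term and long-term memory.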

7. Chaining and Multi-step Prompting Techniques

Sometimes, the key to better outputs isn’t a bigger model, but a smarter way of asking questions. By breaking down difficult tasks into smaller, sequential parts, chaining and multi-step prompting help models reason more efficiently and prevent mistakes.

Among the examples are:

- Prompt chaining: For more structured responses, prompt chaining involves feeding the output of one prompt as the input for the subsequent one.

- Decomposition techniques: Dividing a large query into manageable tasks.

- Stepwise Reasoning: Stepwise reasoning is the process of guiding the model through logical steps as opposed to requiring a single, comprehensive response.

The advantages are both increased precision and reduced computation effort. Through deliberate orchestration, firms can get more dependable outcomes rather than overloading the model with too many demands in a single prompt.
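
Prompt chaining can be sketched as a loop in which each step’s output becomes the next step’s input. In the example below, `fake_model` is a stand-in for a real LLM call, and the three step templates are hypothetical; swapping in an actual model client would be the only change needed to try the pattern.

```python
def run_chain(steps, initial_input, call_model):
    """Run prompt templates in order, feeding each output into the next prompt."""
    result = initial_input
    trace = []
    for template in steps:
        prompt = template.format(input=result)
        result = call_model(prompt)
        trace.append((prompt, result))  # keep a record for debugging
    return result, trace


def fake_model(prompt):
    """Placeholder for an LLM call: wraps the prompt so the data flow is visible."""
    return f"[{prompt}]"


# Hypothetical three-step chain.
steps = [
    "Extract the key facts from: {input}",
    "Summarize these facts in one sentence: {input}",
    "Draft a customer email based on: {input}",
]

final, trace = run_chain(steps, "Q3 churn report", fake_model)
```

Because every intermediate prompt and response is recorded in `trace`, a failing step can be inspected and fixed on its own instead of rewriting one large prompt.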

Conclusion

We began with a hard truth: most companies don’t struggle with generative AI because the models aren’t powerful enough—they struggle because those models are wasteful. Bigger, slower, and costlier doesn’t translate into better business outcomes.

What does?

Smarter, leaner, and well-optimized systems.

What that tells us is that generative AI optimization is a serious business need for executives and decision-makers who value ROI. From model architecture tweaks like pruning and quantization, to smarter workflows, data-centric refinement, hardware-aware deployment, and fine-tuning for specialized applications, optimization unlocks efficiency and trust. Context-window management and multi-step prompting further prove that performance gains are more about strategy than about brute force.

So, what is the final takeaway here?

For decision-makers, the takeaway is simple: AI success isn’t measured by model size, but by measurable impact. That’s where Debut Infotech comes in. As a leading generative AI development company, we bring the expertise, tools, and AI model training and optimization services needed to turn generative AI into a true growth engine.

If you’re ready to move past flashy demos and into scalable, production-ready results, Debut Infotech is the partner to make it happen. Optimization isn’t the future—it’s the foundation. Let’s build it together.

Frequently Asked Questions (FAQs)

Q. Why is data cleanliness crucial in training Generative AI models?

Accuracy, bias reduction, and overall reliability of generative outputs are all enhanced by clean data, while inconsistencies, hallucinations, and increased operating expenses are caused by poor data quality. High-quality, carefully selected datasets often outperform models that are simply scaled up; therefore, data cleaning is the cornerstone of reliable, enterprise-level generative AI implementations.

Q. What primary feature differentiates generative AI from traditional AI models?

In contrast to traditional AI models, which focus on analyzing data and making predictions, generative AI takes a step further by leveraging learned patterns to generate new text, images, music, or designs. While this inventiveness opens up new corporate use cases, it also presents new optimization, accuracy, and ethical deployment issues.

Q. How does generative AI optimization reduce costs for enterprises?

By simplifying architectures, utilizing efficient hardware, and strategically controlling context windows, optimization reduces infrastructure requirements. These enhancements lower latency, decrease the frequency of retraining, and reduce cloud usage. As a result, businesses can produce AI outputs more quickly, accurately, and scalably while spending less on computing resources.

Q. Which optimization technique delivers the fastest ROI for businesses?

The fastest return on investment is frequently achieved by fine-tuning models for domain-specific applications. Businesses reduce training costs and increase relevance by customizing pre-existing generative models to specific use cases. This enables businesses to increase the accuracy of specific tasks, like customer personalization or compliance checks, without having to make significant investments in new infrastructure.

Q. How do hardware-aware strategies impact generative AI performance?

Efficiency and speed are ensured by matching workloads to the appropriate infrastructure, such as GPUs, TPUs, ASICs, or edge devices. Over-provisioning is avoided, energy expenses are decreased, and performance is scaled sustainably with hardware-aware optimization. For executives, this means better AI results that meet practical business needs and more efficient investment.

Q. Why should enterprises work with a specialized generative AI development company?

Expert partners like Debut Infotech provide AI model training and optimization services that accelerate adoption. Specialized businesses provide the technical expertise and industry context necessary to transform generative AI from a resource-intensive experiment into a scalable, business-ready growth driver, spanning from process automation to sophisticated optimization systems.
