Machine Learning Knowledge Graph: Use Cases & Benefits

May 28, 2025

Machine Learning knowledge graphs are changing how machines interpret and connect data. These graphs provide context-rich frameworks that enhance machine learning models’ accuracy and decision-making capabilities by structuring information into interconnected entities and relationships.

The global knowledge graph market is projected to grow from $1.31 billion in 2024 to $1.62 billion in 2025, reflecting a compound annual growth rate (CAGR) of 23.2%. Additionally, Gartner predicts that by 2025, graph technologies will be used in 80% of data and analytics innovations, up from 10% in 2021. This surge underscores the increasing adoption of knowledge graphs across various industries, including healthcare, finance, and e-commerce.

As data complexity escalates, integrating knowledge graphs with machine learning becomes essential for deriving actionable insights and fostering innovation.

This guide explains what machine learning knowledge graphs are, defines the knowledge graph itself, and covers how these graphs function, their core components, real-world applications, tools and frameworks, typical implementation challenges, and the trends shaping their future.

What Is a Machine Learning Knowledge Graph?

A machine learning knowledge graph is a structured framework that links data points using graph-based relationships and semantics, allowing machines to understand, infer, and learn from complex data connections. Unlike traditional databases, it organizes information as a network of entities and their interrelations, forming a graph that is easily navigable for learning algorithms. In this setup, machine learning benefits from enriched context, while the knowledge graph gains adaptability through continuous learning.

What Is a Knowledge Graph?

A knowledge graph is a dynamic data structure that captures relationships between entities in a graph format. Using edges and nodes, it is designed to connect the dots between disparate data points—people, places, events, or concepts. The promise of knowledge graphs lies in their ability to bring meaning to data, allowing intelligent systems to interpret and reason over information the way humans do. As these systems evolve, knowledge graphs are expected to play an increasingly vital role in next-generation AI.

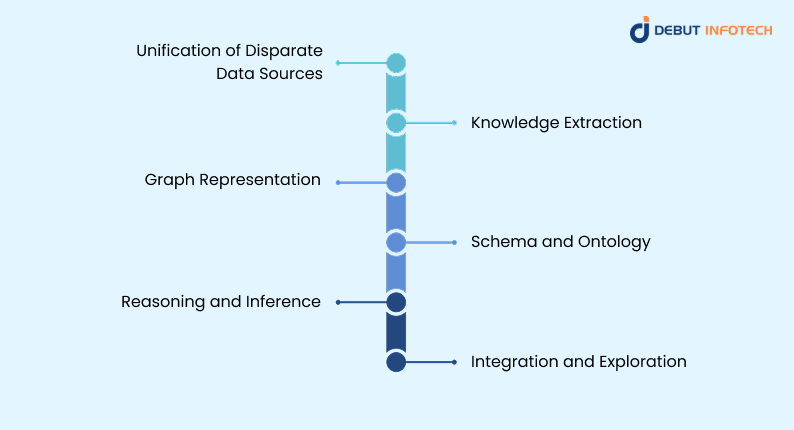

How a Knowledge Graph Works

1. Unification of Disparate Data Sources

Data is often spread across silos—internal databases, external APIs, spreadsheets, and documents. A knowledge graph unifies these disconnected sources into a cohesive structure. By mapping entities from various inputs to a shared ontology, it helps businesses break down data walls, enabling a more complete and accurate foundation for AI and analytics. It’s a foundational step toward building more intelligent systems.
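As a minimal sketch of that mapping step, assume two hypothetical sources (a CRM export and a billing feed) that describe the same customers under different field names. The field names, the sample rows, and the rule of matching on a normalized email address are illustrative assumptions, not a prescribed method.

```python
# Hypothetical records from two silos describing overlapping customers.
crm_rows = [
    {"full_name": "Ada Lovelace", "email": "Ada@Example.com", "segment": "enterprise"},
    {"full_name": "Alan Turing", "email": "alan@example.com", "segment": "startup"},
]
billing_rows = [
    {"customer": "A. Lovelace", "contact_email": "ada@example.com", "plan": "gold"},
]

# Per-source mapping from local field names to shared ontology properties.
FIELD_MAPS = {
    "crm": {"full_name": "name", "email": "email", "segment": "segment"},
    "billing": {"customer": "name", "contact_email": "email", "plan": "plan"},
}


def unify(sources):
    """Merge records into one entity per normalized email address."""
    entities = {}
    for source_name, rows in sources.items():
        mapping = FIELD_MAPS[source_name]
        for row in rows:
            record = {mapping[k]: v for k, v in row.items() if k in mapping}
            key = record["email"].strip().lower()  # assumed matching rule
            record["email"] = key
            entity = entities.setdefault(key, {})
            for prop, value in record.items():
                entity.setdefault(prop, value)  # first source wins on conflicts
    return entities


unified = unify({"crm": crm_rows, "billing": billing_rows})
```

Here the two Lovelace records collapse into one entity that carries both the CRM segment and the billing plan. Real entity resolution usually needs fuzzier matching than a single key.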

2. Knowledge Extraction

This stage involves identifying and pulling relevant entities and their relationships from structured and unstructured data. Natural language processing (NLP), machine learning, and information retrieval techniques are applied to convert raw data into meaningful nodes and edges. The result is a graph that not only holds data but also contextualizes it for machine interpretation.
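To make the idea concrete, here is a deliberately simple sketch that pulls (subject, relation, object) triples out of text with two hand-written patterns. Production pipelines typically rely on trained NLP models. The patterns, relation names, and sample sentence below are assumptions chosen for illustration.

```python
import re

# A capitalized name of one or more words, e.g. "Acme Labs".
NAME = r"[A-Z]\w+(?: [A-Z]\w+)*"

# Hypothetical surface patterns mapped to relation names.
PATTERNS = [
    (re.compile(rf"(?P<s>{NAME}) works at (?P<o>{NAME})"), "works_at"),
    (re.compile(rf"(?P<s>{NAME}) is located in (?P<o>{NAME})"), "located_in"),
]


def extract_triples(text):
    """Return (subject, relation, object) triples found by the patterns."""
    triples = []
    for pattern, relation in PATTERNS:
        for match in pattern.finditer(text):
            triples.append((match.group("s"), relation, match.group("o")))
    return triples


text = "Maria Silva works at Acme Labs. Acme Labs is located in Lisbon."
triples = extract_triples(text)
```

Each triple becomes an edge: the subject and object are nodes, and the relation is the edge label.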

3. Graph Representation

Once the knowledge is extracted, it is stored in a graph data model where each node represents an entity and each edge denotes a relationship. This representation supports intuitive querying, visualization, and inference. The model is scalable and adaptable, which suits systems that must evolve in real time as new data keeps arriving.
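The node-and-edge model can be sketched in a few lines. The class below is a hypothetical in-memory structure for illustration only; a real deployment would normally use a graph database rather than Python dictionaries.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Tiny in-memory graph: nodes are entity names, edges are labeled."""

    def __init__(self):
        self.outgoing = defaultdict(list)  # subject -> [(relation, object)]
        self.nodes = set()

    def add(self, subject, relation, obj):
        """Store one directed, labeled edge and register both endpoints."""
        self.nodes.update((subject, obj))
        self.outgoing[subject].append((relation, obj))

    def objects(self, subject, relation):
        """Return every object linked from subject by the given relation."""
        return [o for r, o in self.outgoing.get(subject, []) if r == relation]


kg = KnowledgeGraph()
kg.add("Aspirin", "treats", "Headache")
kg.add("Aspirin", "interacts_with", "Warfarin")
kg.add("Warfarin", "treats", "Thrombosis")
```

A query such as `kg.objects("Aspirin", "treats")` then follows only the edges with that label.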

4. Schema and Ontology

Schemas define the structure of data, while ontologies specify the meaning and permissible relationships among entities. Together, they ensure semantic consistency and interoperability across systems. This formal backbone allows AI models to understand not just the data itself, but the domain-specific meaning behind it.
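One way to picture the "permissible relationships" part is a domain and range check: each relation declares which entity class may appear on each side. The classes, relations, and entities below are hypothetical, and the check is a simplified stand-in for what ontology languages express far more richly.

```python
# Hypothetical ontology: entity classes plus the (domain, range) each relation allows.
ENTITY_TYPES = {"Aspirin": "Drug", "Warfarin": "Drug", "Headache": "Condition"}
RELATION_RULES = {
    "treats": ("Drug", "Condition"),
    "interacts_with": ("Drug", "Drug"),
}


def validate(triple):
    """Return a list of problems; an empty list means the triple conforms."""
    subject, relation, obj = triple
    if relation not in RELATION_RULES:
        return [f"unknown relation: {relation}"]
    domain, range_ = RELATION_RULES[relation]
    problems = []
    if ENTITY_TYPES.get(subject) != domain:
        problems.append(f"{subject} is not a {domain}")
    if ENTITY_TYPES.get(obj) != range_:
        problems.append(f"{obj} is not a {range_}")
    return problems


ok = validate(("Aspirin", "treats", "Headache"))
bad = validate(("Headache", "treats", "Aspirin"))
```

Running a check like this before data enters the graph is one way to keep semantics consistent across sources.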

5. Reasoning and Inference

Reasoning adds intelligence to the graph. Using logic-based rules or machine learning inference, the graph can discover new connections not explicitly stated. This leads to predictive capabilities and automated insights, turning passive data into active knowledge that drives smarter decision-making.
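A logic-based rule can be as small as transitivity. The sketch below forward-chains a single rule until no new facts appear. The `located_in` relation and the place names are illustrative assumptions, and real reasoners support much richer rule sets.

```python
def infer_transitive(triples, relation):
    """Forward-chain one rule: (a r b) and (b r c) implies (a r c)."""
    known = set(triples)
    changed = True
    while changed:
        changed = False
        edges = [(s, o) for s, r, o in known if r == relation]
        for a, b in edges:
            for b2, c in edges:
                if b == b2 and a != c and (a, relation, c) not in known:
                    known.add((a, relation, c))
                    changed = True
    return known - set(triples)  # only the newly derived facts


facts = [
    ("Alfama", "located_in", "Lisbon"),
    ("Lisbon", "located_in", "Portugal"),
    ("Portugal", "located_in", "Europe"),
]
inferred = infer_transitive(facts, "located_in")
```

None of the three derived facts (for example, that Alfama is in Europe) was stated explicitly in the input.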

6. Integration and Exploration

Knowledge graphs shine when used for integrated exploration of vast, heterogeneous data. Users and algorithms can traverse the graph, explore related entities, and retrieve insights on demand. As organizations scale, this becomes a powerful way to maintain control and visibility over increasingly complex data ecosystems.
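Traversal is the basic operation behind this kind of exploration. The following sketch runs a breadth-first search to find everything within a fixed number of hops of a starting entity. The node identifiers and neighbor lists are made up for the example.

```python
from collections import deque

# Hypothetical undirected neighbor lists.
NEIGHBORS = {
    "Customer:42": ["Order:7", "Ticket:3"],
    "Order:7": ["Customer:42", "Product:X"],
    "Ticket:3": ["Customer:42", "Product:X"],
    "Product:X": ["Order:7", "Ticket:3", "Supplier:S"],
    "Supplier:S": ["Product:X"],
}


def within_hops(start, max_hops):
    """Breadth-first search returning {node: hop distance} up to max_hops."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distance[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in NEIGHBORS.get(node, []):
            if neighbor not in distance:
                distance[neighbor] = distance[node] + 1
                queue.append(neighbor)
    return distance


nearby = within_hops("Customer:42", 2)
```

With a limit of two hops the search reaches the product tied to the customer's order and ticket, but stops short of the supplier.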

Impacts of Knowledge Graphs in 2025

1. Advancing Data Integration and Contextualization

Knowledge graphs empower systems to move beyond simple data storage and into contextual comprehension. By linking disparate data with contextual cues, organizations in 2025 are achieving richer, more integrated views of their information landscapes, fueling operational efficiency and innovation.

2. Improving Predictive Modeling with Richer Data

Predictive models thrive on quality input. Knowledge graphs enhance these models by supplying well-structured, relationship-rich data. This leads to better feature engineering, deeper understanding of dependencies, and improved predictive accuracy, especially in complex domains like healthcare, finance, and logistics.
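As one hedged illustration of graph-driven feature engineering, the sketch below derives two relationship-based features per account from a hypothetical set of account-to-merchant edges: how many merchants the account touches, and how many other accounts share at least one of those merchants. The edge data and feature names are assumptions.

```python
from collections import defaultdict

# Hypothetical transaction edges: (account, merchant).
EDGES = [
    ("acct_1", "shop_a"),
    ("acct_1", "shop_b"),
    ("acct_2", "shop_a"),
    ("acct_3", "shop_a"),
]


def graph_features(edges):
    """Compute per-account degree and two-hop peer count from the edge list."""
    merchants_by_account = defaultdict(set)
    accounts_by_merchant = defaultdict(set)
    for account, merchant in edges:
        merchants_by_account[account].add(merchant)
        accounts_by_merchant[merchant].add(account)

    features = {}
    for account, merchants in merchants_by_account.items():
        peers = set()
        for merchant in merchants:
            peers |= accounts_by_merchant[merchant]
        peers.discard(account)  # an account is not its own peer
        features[account] = {"degree": len(merchants), "two_hop_peers": len(peers)}
    return features


features = graph_features(EDGES)
```

Columns like these can be appended to an ordinary tabular training set, giving a model signals that a flat table of transactions would not expose directly.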

3. Enabling Real-Time Decision-Making in AI Systems

AI systems increasingly require real-time insights. With knowledge graphs, AI can continuously ingest new data, update relationships, and adjust outputs, all in real time. This makes them invaluable for high-stakes use cases such as fraud detection, emergency response, and dynamic pricing.

How the Connection Between Knowledge Graphs and Machine Learning Works

The relationship is symbiotic. Machine learning models use knowledge graphs to gain better context, enhance predictions, and reason over data. At the same time, knowledge graphs use machine learning to automate extraction, classification, and relationship mapping. This two-way interaction leads to adaptive, context-aware AI systems that learn and evolve with minimal manual intervention.

Key Features of a Machine Learning Knowledge Graph

1. Interlinked Descriptions of Entities

Entities are connected using meaningful relationships, forming a rich semantic network. These connections allow machines to understand how different concepts relate, enabling more nuanced queries, smarter predictions, and precise recommendations.

2. Formal Semantics and Ontologies

Ontologies provide the rules and semantics that underpin the graph. They define entity classes, relationships, and properties, ensuring interpretability and consistency across systems. This foundation is critical for reliable machine learning outcomes.

3. Scalability and Flexibility

Machine learning knowledge graphs are built to scale. Whether handling a few thousand data points or millions, they offer flexible architectures that adapt to changing data inputs, evolving domains, and expanding workloads.

4. Ability to Integrate Data from Multiple Sources

Modern systems can’t afford isolated data. These graphs excel at harmonizing internal systems, third-party feeds, web data, and more, creating a single source of connected truth that enhances decision-making at every level.

Benefits of Using Machine Learning with Knowledge Graphs

1. Improved Accuracy and Efficiency

Machine learning models benefit from the structured, semantically rich relationships in knowledge graphs, which reduce ambiguity and noise. This leads to higher accuracy, faster training times, and better generalization in predictive tasks, especially when handling large-scale, multi-source, or domain-specific datasets across enterprise environments.

2. Enhanced Understanding of Complex Relationships

Knowledge graphs map interdependencies between entities in ways traditional databases cannot. When combined with ML, these insights uncover latent patterns, indirect correlations, and cause-effect relationships, allowing for more informed decision-making in complex domains like biology, finance, or policy analysis where relational context is vital.

3. Ability to Handle Unstructured Data

Integrating machine learning with knowledge graphs allows systems to extract meaning from unstructured sources—emails, documents, social media, etc.—and map them to structured entities. This bridges the gap between text-heavy inputs and structured knowledge, significantly boosting relevance, categorization, and downstream analysis tasks in real-world deployments.

4. Improved Reasoning and Inference

ML models often struggle with logical inference, but knowledge graphs offer a semantic backbone for reasoning over relationships. This enables predictive systems to generate explanations, infer missing data, and simulate outcomes, critical in diagnostics, fraud detection, or autonomous systems where trust and transparency matter.

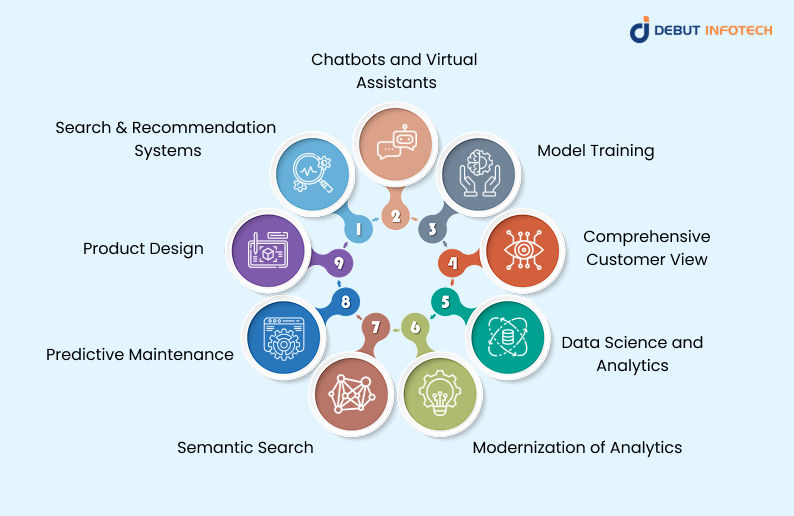

Use Cases for Knowledge Graphs with Machine Learning

1. Search and Recommendation Systems

Machine Learning knowledge graphs power personalized search and recommendation engines by linking user preferences, behaviors, and content metadata. This improves the relevance of suggested products, media, or services. Graph-based representations help identify hidden patterns and contextual connections that standard collaborative filtering methods may overlook.
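The sketch below shows one simple way content metadata can drive recommendations: unseen items are scored by how many metadata nodes (here, director and genre tags) they share with items the user already liked. The users, films, and tags are hypothetical, and production systems typically combine such paths with learned models.

```python
from collections import Counter

# Hypothetical edges: user -> liked items, item -> metadata tags.
LIKED = {"ana": {"film_a"}}
ITEM_TAGS = {
    "film_a": {"director:lee", "genre:noir"},
    "film_b": {"genre:noir"},
    "film_c": {"director:lee", "genre:noir"},
    "film_d": {"genre:comedy"},
}


def recommend(user, top_n=2):
    """Rank unseen items by the number of tags shared with liked items."""
    seen = LIKED[user]
    scores = Counter()
    for liked in seen:
        for candidate, tags in ITEM_TAGS.items():
            if candidate in seen:
                continue
            shared = len(ITEM_TAGS[liked] & tags)  # item-tag-item paths
            if shared:
                scores[candidate] += shared
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:top_n]]


suggestions = recommend("ana")
```

Because the score comes from metadata paths rather than other users' behavior, this approach can surface an item that nobody else has rated yet.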

2. Chatbots and Virtual Assistants

Integrating knowledge graphs allows chatbots to understand user queries with contextual awareness, enhancing dialogue quality and intent resolution. They support dynamic conversations, enable accurate entity linking, and deliver more human-like responses by drawing on structured semantic relationships between topics, users, and conversational context.

3. Model Training

Knowledge graphs enrich training datasets with well-defined relationships and semantic metadata, providing machines with more structured input. This enhances machine learning model accuracy and reduces training noise, especially in domains like healthcare or finance, where entity disambiguation and domain knowledge play a critical role in predictive performance.

4. Comprehensive Customer View

By unifying customer data from various touchpoints—web, email, CRM, social—into a knowledge graph, organizations can build a complete, real-time customer profile. Machine learning uses this graph to segment audiences, predict behaviors, and optimize personalized marketing, loyalty, or support interventions based on inferred preferences.

5. Data Science and Analytics

Graph-structured data enables analysts and data scientists to uncover insights by tracing relationships between entities, such as transactions, behaviors, or interactions. Machine learning layered on top helps classify, predict, or cluster based on complex patterns, improving the accuracy of models in fraud detection, HR analytics, or finance.

6. Modernization of Analytics

Traditional analytics tools struggle with contextualizing diverse data types. Knowledge graphs introduce flexibility and semantic richness, allowing machine learning to identify trends, correlations, and outliers across disparate sources. This supports faster and more intuitive decision-making in modern analytics environments, including business operations, supply chains, and risk analysis.

7. Semantic Search

Knowledge graphs enhance search engines by interpreting user intent rather than relying solely on keywords. Machine learning interprets queries semantically, maps them to structured entities, and retrieves results with deeper contextual relevance. This is essential for academic, legal, and medical domains where precision and meaning are crucial.

8. Predictive Maintenance

Industrial systems use machine learning knowledge graphs to correlate sensor data, operational logs, and equipment metadata. These graphs identify early failure indicators and recommend maintenance schedules. This reduces downtime and extends equipment lifespan, particularly in manufacturing, aerospace, and energy sectors where predictive accuracy saves significant costs.

9. Product Design

Design teams use knowledge graphs to aggregate feedback, usage patterns, and market data across platforms. Machine learning identifies gaps, trends, and feature opportunities. This accelerates product innovation, aligns development with real-world user needs, and fosters more responsive design iterations in software, electronics, and consumer goods.

Actionable Strategies for Leveraging Machine Learning Knowledge Graphs

1. Start with a Clearly Defined Ontology

Establish a domain-specific ontology that defines entity types, relationships, and constraints. This ensures consistent data modeling and enables semantic clarity, which is essential for both machine learning algorithms and downstream users to interpret, reason over, and build upon the graph’s structure confidently.

2. Use Graph Embeddings for ML Integration

Apply graph embedding techniques such as Node2Vec, TransE, or GraphSAGE to convert graph structure into vectors that ML models can consume. This bridges the gap between graph databases and predictive algorithms, enabling richer features for classification, clustering, recommendation, and anomaly detection tasks across various industries.
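To give a feel for what an embedding model does, here is a sketch of the TransE scoring idea: a triple is plausible when the head vector plus the relation vector lands close to the tail vector. The two-dimensional vectors below are hand-picked for illustration; in practice they are learned from the graph, and training is omitted here.

```python
import math

# Hypothetical 2-dimensional embeddings; real ones are learned from the graph.
ENTITY_VECS = {
    "Paris": (1.0, 0.0),
    "France": (1.0, 1.0),
    "Berlin": (3.0, 0.0),
    "Germany": (3.0, 1.0),
}
RELATION_VECS = {"capital_of": (0.0, 1.0)}


def transe_distance(head, relation, tail):
    """L2 distance between head + relation and tail; smaller is more plausible."""
    h, r, t = ENTITY_VECS[head], RELATION_VECS[relation], ENTITY_VECS[tail]
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def rank_tails(head, relation):
    """Rank candidate tails from most to least plausible."""
    candidates = [e for e in ENTITY_VECS if e != head]
    return sorted(candidates, key=lambda tail: transe_distance(head, relation, tail))


ranking = rank_tails("Paris", "capital_of")
```

The same vectors can be passed to a downstream classifier or clustering model as ordinary numeric features.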

3. Automate Graph Enrichment via NLP Pipelines

Deploy NLP pipelines to extract entities, relationships, and attributes from unstructured data sources such as reports, articles, and logs. Automatically feed these insights into the knowledge graph to keep it updated and contextually enriched, improving both its scalability and relevance to evolving machine learning tasks.

4. Incorporate Feedback Loops from ML Outputs

Establish feedback mechanisms where predictions and insights generated by ML models help refine the knowledge graph structure. This creates a self-improving system, where new entity types, relationships, or missing links are detected, enriching the graph’s utility and improving long-term performance of AI applications.
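A feedback loop of this kind can start very simply. In the sketch below, model-predicted links are written back to the graph only when their confidence clears a threshold, and the rest are queued for human review. The threshold value, the triple format, and the sample predictions are assumptions.

```python
def apply_feedback(graph_triples, predictions, threshold=0.9):
    """Add confident, previously unknown predictions; queue the rest for review."""
    accepted, review = [], []
    for triple, confidence in predictions:
        if triple in graph_triples:
            continue  # already known, nothing to learn
        if confidence >= threshold:
            graph_triples.add(triple)
            accepted.append(triple)
        else:
            review.append((triple, confidence))
    return accepted, review


graph_triples = {("acct_1", "owned_by", "ana")}
predictions = [
    (("acct_2", "owned_by", "ana"), 0.97),
    (("acct_3", "owned_by", "ana"), 0.55),
    (("acct_1", "owned_by", "ana"), 0.99),
]
accepted, review = apply_feedback(graph_triples, predictions)
```

Keeping a review queue for low-confidence links is a design choice that guards against the model reinforcing its own mistakes.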

Challenges in Implementing Machine Learning Knowledge Graphs

1. Complexity in Development and Maintenance

Developing machine learning knowledge graphs requires advanced modeling, domain expertise, and scalable architecture.

Solution: Use modular graph-building frameworks, automate entity extraction, and build on established graph tooling such as RDF4J or Neo4j to reduce technical overhead and keep the graph maintainable.

2. Data Quality and Consistency

Inconsistent, incomplete, or noisy data can distort graph relationships and lead to inaccurate machine learning outputs.

Solution: Implement robust data validation pipelines, use ontology-driven data standardization, and regularly audit graph inputs to maintain reliability and consistency across all integrated sources.

3. Scalability in Large-Scale Systems

Scaling knowledge graphs for big data and real-time applications requires high-performance infrastructure and optimized query execution.

Solution: Leverage distributed graph databases, parallel processing, and cloud-native solutions like Amazon Neptune or TigerGraph to handle high-velocity, high-volume data efficiently.

4. Data Security and Privacy

Sensitive relationships in graphs can expose personal or proprietary information, especially in healthcare, finance, or enterprise systems.

Solution: Apply role-based access controls, encryption at rest and in transit, and anonymization techniques to protect sensitive data while complying with privacy regulations like GDPR or HIPAA.
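As a hedged sketch of one such technique, the code below replaces sensitive node identifiers with keyed hashes before a subgraph is shared. Strictly speaking this is pseudonymization, which is weaker than full anonymization because the graph's structure can still reveal identities. The `patient:` prefix convention and the placeholder key are assumptions.

```python
import hashlib
import hmac

SECRET_KEY = b"replace-with-a-managed-secret"  # placeholder, not a real key
SENSITIVE_PREFIXES = ("patient:",)  # assumed naming convention


def pseudonymize(node_id):
    """Replace sensitive identifiers with a keyed, repeatable token."""
    if not node_id.startswith(SENSITIVE_PREFIXES):
        return node_id
    digest = hmac.new(SECRET_KEY, node_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return "anon:" + digest[:16]


def pseudonymize_triples(triples):
    """Apply pseudonymize to both endpoints of every triple."""
    return [(pseudonymize(s), r, pseudonymize(o)) for s, r, o in triples]


shared = pseudonymize_triples([
    ("patient:jane-doe", "diagnosed_with", "condition:asthma"),
    ("patient:jane-doe", "treated_by", "clinic:north"),
])
```

Because the token is deterministic for a given key, the same patient still maps to the same node, so relationships survive while the raw identifier does not.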

Future Trends in Machine Learning Knowledge Graphs

1. Integration with Generative AI Models

Knowledge graphs will increasingly support generative AI systems by providing structured context for more accurate, explainable outputs. As LLMs scale, grounding generation in factual, linked data enhances reasoning, reduces hallucination, and enables domain-specific language generation in healthcare, legal, and scientific applications.
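Grounding can be sketched as a retrieval step: look up the facts linked to the entities in a question and place them in front of the model. The example below only builds the prompt text and does not call any language model. The fact list, the entity set passed in, and the prompt wording are assumptions.

```python
# Hypothetical fact store; a real system would query a graph database.
FACTS = [
    ("Neo4j", "is_a", "Graph database"),
    ("Amazon Neptune", "is_a", "Graph database"),
    ("TransE", "is_a", "Embedding technique"),
]


def facts_about(entities):
    """Select triples whose subject or object is one of the given entities."""
    return [t for t in FACTS if t[0] in entities or t[2] in entities]


def build_prompt(question, entities):
    """Prepend retrieved facts so a language model can answer from them."""
    lines = [f"- {s} {r.replace('_', ' ')} {o}" for s, r, o in facts_about(entities)]
    context = "\n".join(lines) if lines else "- (no matching facts)"
    return f"Use only these facts:\n{context}\n\nQuestion: {question}"


prompt = build_prompt("What is Neo4j?", {"Neo4j"})
```

Only the facts connected to the question's entities reach the prompt, which keeps the context short and makes the answer traceable to specific edges.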

2. Autonomous Graph Construction and Updating

Future systems will automate the building and maintenance of knowledge graphs using self-supervised learning, NLP, and real-time data streams. This reduces manual effort and enables up-to-date, context-aware graphs capable of supporting dynamic environments like cybersecurity monitoring or rapidly evolving financial markets.

3. Greater Emphasis on Explainable AI (XAI)

Machine learning knowledge graphs will drive advances in explainable AI by making relationships, decision paths, and predictions traceable. This transparency is essential in regulatory environments—healthcare, insurance, and law—where explainability fosters trust, accountability, and compliance with AI governance frameworks.

4. Cross-Industry Standardization and Interoperability

As adoption grows, industries will move toward shared ontologies and standardized graph structures. This ensures compatibility across machine learning platforms, tools, and vendors, encouraging collaboration and smoother data exchange, especially in sectors like supply chain, finance, and smart cities, where ecosystem-wide knowledge sharing is critical.

Conclusion

Machine Learning knowledge graphs are not just a technical advancement—they’re a shift in how machines interpret and reason with data. By bringing structure and context to vast information networks, they enhance explainability, scalability, and adaptability in AI-driven systems. From personalized healthcare to smarter digital assistants, their influence continues to expand.

As technologies mature and integration deepens, knowledge graphs will likely play a central role in making AI more transparent, insightful, and effective across industries. Investing in this space now means staying ahead in the data intelligence game.

FAQs

Q. How do you generate a knowledge graph?

A. To generate a knowledge graph, start by collecting structured and unstructured data, extract the entities and relationships it contains, and then organize them into a graph. Standards such as RDF and OWL and graph databases such as Neo4j support this work. Automated pipelines and ontologies can streamline the process for scalability and accuracy.

Q. What is the difference between a GNN and a knowledge graph?

A. A knowledge graph is a structured representation of entities and their relationships. Graph Neural Networks (GNNs), on the other hand, are machine learning models designed to analyze graph-structured data, including knowledge graphs, for tasks like classification, prediction, or embedding.

Q. Is a knowledge graph a data model?

A. Yes, a knowledge graph functions as a semantic data model. It represents entities, their properties, and relationships in a graph structure. This enables machines to understand, integrate, and query information meaningfully across diverse domains and data sources.

Q. Is a knowledge graph part of NLP?

A. Knowledge graphs are often integrated into Natural Language Processing (NLP) tasks. They enhance understanding, disambiguation, and reasoning in language models by providing contextual knowledge and structured background information relevant to human language.

Q. Are knowledge graphs a part of machine learning?

A. Knowledge graphs are not machine learning models themselves but are frequently used within machine learning workflows. They support tasks like recommendation, reasoning, and classification by providing structured context, improving both model performance and explainability.
