An In-Depth Look at Using GANs for Generative AI Projects

May 27, 2025

Have you ever found it amazing that machines can design pictures of imaginary people that look perfectly real?

Thanks to rapid advances in deep learning, neural networks can now be trained to produce realistic, entirely new outputs. The Generative Adversarial Network (GAN) is one such method: it can create new images of faces based on patterns learned from its training data. To understand how GANs work in AI, it’s essential to explore their two-part structure: a generator that creates synthetic data and a discriminator that evaluates its authenticity.

In the following article, we’ll explain GANs, how they work, the areas they are used in and the significant effect they’re having on different industries. Leading generative AI development companies are already harnessing this technology to build tools for everything from personalized content creation to advanced data augmentation.

Without further ado, let’s dive in!

What Are Generative Adversarial Networks?

Generative Adversarial Networks (GANs) were introduced in 2014 by Ian Goodfellow and his colleagues. As a form of generative modeling, GANs aim to produce outputs that resemble the data they were trained on.

GANs mainly depend on two kinds of neural networks working against each other, the Generator and the Discriminator. These networks are engaged in a game-like situation where they each try to outwit one another. These capabilities make GANs invaluable for Generative AI Integration Services, which leverage such frameworks to build scalable, realistic data solutions for industries like healthcare, entertainment, and finance.

Let’s break down the term GAN:

- Generative: Refers to the ability to model how data is generated, typically using probabilistic methods. It essentially simulates the data creation process.

- Adversarial: The model is trained in a competitive (adversarial) setting, where two networks oppose each other.

- Networks: Deep neural networks are employed to build and train both the generator and the discriminator.

How GANs Work:

- The Generator starts from random noise and produces output meant to imitate the training data, which could include images, text, audio and other types of data.

- The Discriminator examines samples and tries to separate the real data from the fake data that was generated.

During the training process, the generator produces new fake data and the discriminator learns to classify it as fake. As the generator is adjusted round after round, its ability to produce realistic data gets better.

Why Were GANs Developed?

Even a small amount of noise added to the input can cause machine learning models, including neural networks, to misinterpret the data. This sensitivity increases the chance of wrong results, especially in image classification.

Therefore, an important question appeared: Is it possible to design a system that lets neural networks learn and reproduce patterns resembling their training data? As a result, Generative Adversarial Networks (GANs) were developed to generate synthetic data that closely resembles the original set used for training. Organizations looking to leverage this innovation often partner with a generative AI development company to implement these systems effectively.

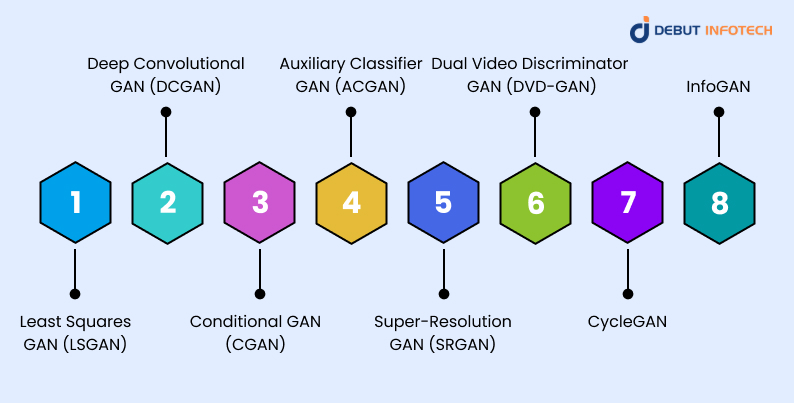

Types of GANs

1. Least Squares GAN (LSGAN)

Unlike a standard GAN, LSGAN replaces the binary cross-entropy loss of the discriminator with a least-squares loss. This reduces the vanishing gradient problem, which makes training more stable and improves the quality of the results. LSGANs are used to improve image quality and training stability in fields such as medical imaging.
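
To make the vanishing gradient point concrete, here is a minimal sketch in plain Python. It assumes a single fake sample and compares the gradient the generator receives from the discriminator's output under the original log-loss with the gradient under a least-squares loss. The function names and the target value of 1 are illustrative choices, not part of any library.

```python
import math

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def bce_generator_grad(logit):
    """Gradient of log(1 - sigmoid(logit)) with respect to the logit."""
    return -sigmoid(logit)

def lsgan_generator_grad(score, target=1.0):
    """Gradient of 0.5 * (score - target)**2 with respect to the raw score."""
    return score - target

# A fake sample that the discriminator rejects with growing confidence.
for value in (-2.0, -5.0, -10.0):
    print(value, abs(bce_generator_grad(value)), abs(lsgan_generator_grad(value)))
```

As the discriminator's output for a fake sample becomes more negative, the log-loss gradient shrinks toward zero, while the least-squares gradient keeps growing with the distance from the target.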

2. Deep Convolutional GAN (DCGAN)

Among GAN models, DCGAN is considered both popular and powerful. It replaces standard multilayer perceptrons with convolutional neural networks (ConvNets) and uses strided convolutions in place of max pooling. This design improves feature extraction and stabilizes training. DCGANs are known for creating realistic-looking pictures from random noise.
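
The effect of strided convolutions on image size can be checked with simple arithmetic. The sketch below assumes square feature maps, no dilation and no output padding. The layer settings (kernel 4, stride 2, padding 1) are hypothetical values chosen in the spirit of a DCGAN-style network, not a definitive configuration.

```python
def strided_conv_size(size, kernel, stride, padding):
    """Output width of a strided convolution (used for downsampling)."""
    return (size + 2 * padding - kernel) // stride + 1

def transposed_conv_size(size, kernel, stride, padding):
    """Output width of a transposed convolution (used for upsampling)."""
    return (size - 1) * stride - 2 * padding + kernel

def trace_sizes(start, layers, size_fn):
    """Apply each (kernel, stride, padding) layer in turn and record the widths."""
    sizes, size = [], start
    for kernel, stride, padding in layers:
        size = size_fn(size, kernel, stride, padding)
        sizes.append(size)
    return sizes

# Hypothetical generator: a 1x1 noise "pixel" grows to a 64x64 image.
GENERATOR_LAYERS = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]
# Hypothetical discriminator: a 64x64 image shrinks without any pooling layer.
DISCRIMINATOR_LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

print(trace_sizes(1, GENERATOR_LAYERS, transposed_conv_size))
print(trace_sizes(64, DISCRIMINATOR_LAYERS, strided_conv_size))
```

With these settings each stride-2 layer doubles the width in the generator and halves it in the discriminator, which is the job that pooling and fixed upsampling would otherwise do.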

3. Conditional GAN (CGAN)

CGANs extend GANs for Generative AI by feeding additional information, such as class labels, to both the generator and the discriminator. For example, you can train a CGAN on labeled handwritten digits and then pass the label “3” so that the output resembles the number 3.
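
One common way to supply the label is to append a one-hot encoding of it to the noise vector. The sketch below illustrates only that input-building step. The helper names, the noise size of 8 and the 10 digit classes are assumptions made for the example.

```python
import random

def one_hot(label, num_classes):
    """Encode a class index as a vector with a single 1.0."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]

def conditioned_input(noise, label, num_classes):
    """Concatenate a noise vector with the one-hot class label."""
    return noise + one_hot(label, num_classes)

rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(8)]

# Ask the (hypothetical) generator for the digit "3".
g_input = conditioned_input(noise, label=3, num_classes=10)
print(len(g_input), g_input[8:])
```

The discriminator would receive the same label alongside each image, so it can reject a realistic-looking digit that does not match the requested class.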

4. Auxiliary Classifier GAN (ACGAN)

Unlike CGAN, the ACGAN discriminator has two jobs: deciding whether an image is real or fake and predicting the class of each image. For example, a discriminator trained on a dataset of animals reports whether an image is authentic and also classifies it as a dog, cat or bird, which pushes the generator to create images that match the requested class.
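
The two jobs can be read as two loss terms added together. The following sketch assumes the discriminator returns a probability of "real" plus a probability for each class. The function names and the example numbers are hypothetical.

```python
import math

EPS = 1e-12  # guards against log(0)

def source_loss(real_prob, is_real):
    """Binary cross-entropy for the real-versus-fake decision."""
    target = 1.0 if is_real else 0.0
    return -(target * math.log(real_prob + EPS)
             + (1.0 - target) * math.log(1.0 - real_prob + EPS))

def class_loss(class_probs, true_class):
    """Cross-entropy for the predicted class."""
    return -math.log(class_probs[true_class] + EPS)

def acgan_discriminator_loss(real_prob, is_real, class_probs, true_class):
    """Sum of the authenticity term and the classification term."""
    return source_loss(real_prob, is_real) + class_loss(class_probs, true_class)

CLASSES = ["dog", "cat", "bird"]
cat = CLASSES.index("cat")

# A real photo of a cat, judged well and judged badly.
good = acgan_discriminator_loss(0.9, True, [0.1, 0.8, 0.1], cat)
bad = acgan_discriminator_loss(0.4, True, [0.6, 0.3, 0.1], cat)
print(round(good, 3), round(bad, 3))
```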

5. Super-Resolution GAN (SRGAN)

SRGAN applies GANs to super-resolution. It takes a low-resolution source picture and transforms it into a more detailed high-resolution version. For instance, it can upscale a low-quality 240p frame into a sharper 1080p frame, which supports generative AI trends focused on enhancing visual data quality for applications like satellite photography and video restoration.

6. Dual Video Discriminator GAN (DVD-GAN)

DVD-GAN is intended for generating videos and is based on the BigGAN architecture. It makes use of two discriminators: one called Spatial discriminator to judge individual images and the other named Temporal discriminator to check motion and sequence, showcasing how generative AI frameworks push the boundaries of dynamic content creation. As an example, DVD-GAN could make a video of a person walking so that the background and movement seem natural throughout the film.

7. CycleGAN

CycleGAN can perform image-to-image translation between different domains, even if there are no corresponding matching images. For example, it could process images of different landscapes taken during the summer and during the winter separately. After training the system, it will be capable of taking a photo of a beach in the sun and changing it to a snowy landscape and vice versa. Businesses aiming to explore such capabilities might consider partnering with generative AI consultants to tailor these models for tasks like film production or architectural visualization.
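
What lets CycleGAN train without paired images is a cycle-consistency check: translating an image to the other domain and back should return something close to the original. Here is a toy sketch of that check. Images are stood in for by short lists of pixel values, and the "translators" are hand-written functions rather than trained networks.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized 'images'."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def cycle_consistency_loss(x, y, g, f):
    """L1 error after translating x -> Y -> X and y -> X -> Y."""
    forward = l1_distance(f(g(x)), x)
    backward = l1_distance(g(f(y)), y)
    return forward + backward

# Hypothetical stand-ins for trained networks: "winter" is a brighter "summer".
def to_winter(img):
    return [p + 0.2 for p in img]

def to_summer(img):
    return [p - 0.2 for p in img]

def bad_to_summer(img):
    return [p - 0.5 for p in img]

summer = [0.1, 0.4, 0.7]
winter = [0.5, 0.6, 0.9]

consistent = cycle_consistency_loss(summer, winter, to_winter, to_summer)
inconsistent = cycle_consistency_loss(summer, winter, to_winter, bad_to_summer)
print(consistent, inconsistent)
```

In a real CycleGAN this term is added to the adversarial losses of both translators, so each one is pushed to stay invertible while still fooling its discriminator.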

8. InfoGAN

InfoGAN adds an information-theoretic component to GANs, a mutual information term between part of the latent input and the generated output, which helps the network learn meaningful, disentangled features from data. With this approach, it can generate pictures of faces where attributes such as rotation and hair are controlled by simple latent variables learned without labels. It highlights how unsupervised learning can drive innovations in personalized content generation, paving the way for more intuitive adaptive AI development in fields like gaming or virtual design.

Applications of Generative Adversarial Networks (GANs)

1. Data Augmentation and Generation

Many GAN models are designed to create synthetic data that closely resembles real datasets. In medical fields, for example, GANs can create new MRI scans to train AI systems when the available data is not enough.

2. Creating Realistic Human Faces

With GANs, it is possible to create lifelike portraits of people who do not actually exist. These computer-created faces, powered by GANs for Generative AI, are included in video games, used during film production, and employed to test facial recognition tools. Such advancements highlight the transformative future of AI in creative industries.

3. Voice Cloning and Music Composition

With a small sample of someone’s voice, GAN-based models can imitate different vocal styles, and generative models can also compose music. A widely cited example of generated music is Jukebox from OpenAI, a program that can write full tunes in the style of various artists using vocals, instruments and lyrics, although it is built on a VQ-VAE with autoregressive transformers rather than a GAN.

4. Text-to-Image Synthesis

Models such as StackGAN and AttnGAN can take a brief text description and create a detailed image to match. For instance, given “a futuristic city skyline at night with glowing blue skyscrapers,” the model can create a picture that matches the description even though the scene does not exist.

5. Character Design for Animation and Gaming

Many anime and video game makers are using GANs to create original characters, which has sped up the way visual stories and games are created. To leverage this innovation, studios often hire generative AI developers who specialize in optimizing these systems for dynamic design workflows.

6. Image-to-Image Translation

This involves changing the style or domain of an image while preserving its content. For example, colorizing an old black-and-white image, or replacing summer with winter in a landscape photo while keeping the scenery and the people in it unchanged.

7. Super-Resolution Imaging

GANs are able to take low-quality visuals and make them crisper and clearer. This helps a lot with satellite pictures, security recordings and the restoration of older prints.

8. Video Frame Prediction

After observing a video sequence, a GAN is able to predict what will appear in the next frame. As an illustration, in autonomous driving, this allows for the prediction of where pedestrians or vehicles are headed.

9. Artistic and Interactive Content Generation

GANs for Generative AI are used in the art world for things like producing paintings, abstract art or interactive videos. If proper training data is available, they can make images in a well-known artist’s style or discover new ways to express ideas.

10. Speech Synthesis

GANs have made it easier to produce human-sounding voices through speech synthesis. One notable example is MelGAN, a GAN-based vocoder used in text-to-speech pipelines. It turns mel-spectrograms, which represent sounds, into audio waveforms very quickly.

Key Components of a Generative Adversarial Network (GAN)

1. Generator Network

The generator is in charge of forming new samples that resemble the examples in the original dataset. It starts from a vector of random values and transforms it into data such as handwriting, clips of speech or financial sequences. The goal of the generator is to create outputs that look so realistic that the discriminator falls for them.

2. Discriminator Network

The discriminator is designed to separate real data from the training set and fake data made by the generator. Its output is a confidence score indicating the probability that a given input is real.

3. Adversarial Learning Dynamics

In generative AI models like GANs, the generator and discriminator ‘compete’ with each other during training. The generator focuses on generating more realistic data, and the discriminator works on identifying generated data as fake. As a result, each model adjusts its strategy little by little, and the generated content looks more realistic as time goes on.

4. Loss Metrics

The generator’s loss measures how well it deceives the discriminator; minimizing it maximizes the chance that the discriminator mistakes fake data for real data. The discriminator’s loss measures how correctly it classifies real data versus generated data. Both losses drive weight updates in their respective networks.
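
Both losses can be written in a few lines once the discriminator's outputs are treated as probabilities of "real". The sketch below uses binary cross-entropy and the commonly used non-saturating form of the generator loss. The probability values are made up for illustration.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score 1, generated samples 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating loss: low when the discriminator scores fakes as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

d_real = [0.9, 0.8]   # discriminator outputs on real samples
caught = [0.1, 0.2]   # outputs on fakes it recognizes
fooled = [0.9, 0.8]   # outputs on fakes it mistakes for real

print(discriminator_loss(d_real, caught), generator_loss(caught))
print(discriminator_loss(d_real, fooled), generator_loss(fooled))
```

The two losses pull in opposite directions: the same change that lowers the generator's loss (fakes scored as real) raises the discriminator's loss.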

5. Input Noise Vector

The generator takes a random noise vector as its input. The values in this vector are usually sampled from a Gaussian or uniform distribution, and different samples produce different outputs. For example, varying the noise vector can lead the generator to make music or create art in a variety of styles.
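
Sampling such a vector needs nothing beyond the standard library. In the sketch below, the dimension of 100 and the uniform range of -1 to 1 are common but arbitrary choices, and `sample_noise` is a name invented for this example.

```python
import random

def sample_noise(dim, distribution="gaussian", rng=random):
    """Draw one noise vector of length `dim` for the generator."""
    if distribution == "gaussian":
        return [rng.gauss(0.0, 1.0) for _ in range(dim)]
    if distribution == "uniform":
        return [rng.uniform(-1.0, 1.0) for _ in range(dim)]
    raise ValueError(f"unknown distribution: {distribution}")

rng = random.Random(42)  # fixed seed so the example is repeatable
z_gauss = sample_noise(100, "gaussian", rng)
z_unif = sample_noise(100, "uniform", rng)
print(len(z_gauss), min(z_unif), max(z_unif))
```

Fixing the seed is useful when you want to compare the generator's output for the same noise vector at different points in training.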

6. Real vs. Generated Data

Real data consists of authentic examples from the training set, taken from areas such as satellite imagery or video recordings. Generated, or synthetic, data is made by the generator during training and is presented to the discriminator alongside the real data.

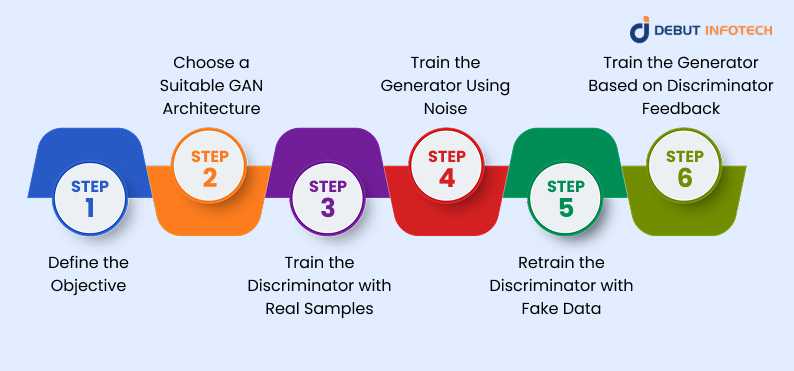

Training and Prediction in Generative Adversarial Networks (GANs)

Now that you understand the components of a GAN, we’ll talk about how to train them. We are going to look at how the Generator and Discriminator are each trained within a GAN.

Step 1: Define the Objective

All GAN projects are based on a specific goal from the start. The outcome of your GAN relies heavily on how you define the problem. GANs can be helpful in creating many different outputs such as synthetic speech, paintings in the style of Van Gogh, 3D models or new types of fashion. In all these domains, you must make sure to clarify exactly what kind of data you are generating.

Step 2: Choose a Suitable GAN Architecture

There are different types of GANs like DCGAN, CycleGAN, StyleGAN, all made for unique purposes. If you are producing images of high-resolution human faces, using StyleGAN is a good option. Select the architecture that is most appropriate for your problem and the data available.

Step 3: Train the Discriminator with Real Samples

At this point, the Discriminator is taught to recognize real data. It receives real examples from the original dataset, such as actual handwriting. The Generator is not involved in this stage, so no gradients are backpropagated through it.

- The Discriminator predicts whether each input is real or fake.

- A loss function (e.g., Binary Cross-Entropy) penalizes incorrect predictions.

- Weights are updated using the Discriminator’s loss to enhance its accuracy.
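
The three bullet points above can be sketched with a deliberately tiny discriminator. This example assumes one-dimensional data and a discriminator of the form D(x) = sigmoid(w * x + b), so the gradient can be written by hand. The data distribution, learning rate and function names are all hypothetical.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def softplus(s):
    """Stable log(1 + exp(s)); softplus(-s) equals -log(sigmoid(s))."""
    return max(s, 0.0) + math.log1p(math.exp(-abs(s)))

def real_batch_loss(w, b, real_batch):
    """Mean binary cross-entropy against the label 'real' (1)."""
    return sum(softplus(-(w * x + b)) for x in real_batch) / len(real_batch)

def discriminator_step_real(w, b, real_batch, lr=0.1):
    """One gradient step on real samples for D(x) = sigmoid(w * x + b)."""
    grad_w = grad_b = 0.0
    for x in real_batch:
        error = sigmoid(w * x + b) - 1.0  # prediction minus target (1)
        grad_w += error * x
        grad_b += error
    n = len(real_batch)
    return w - lr * grad_w / n, b - lr * grad_b / n

rng = random.Random(0)
real_batch = [rng.gauss(4.0, 1.0) for _ in range(64)]  # stand-in "real" data

w, b = 0.0, 0.0
before = real_batch_loss(w, b, real_batch)
w, b = discriminator_step_real(w, b, real_batch)
after = real_batch_loss(w, b, real_batch)
print(before, after)
```

A single step already lowers the loss on the real batch. In practice this update is combined with the fake-sample update so the discriminator does not simply learn to answer "real" for everything.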

Step 4: Train the Generator Using Noise

After that, we train the Generator by giving it random noise as input and hoping it will output images that are similar to real ones. It may, for example, produce handwritten digits based on random vectors.

Here’s how it works:

- Sample random noise (e.g., a vector from a normal distribution).

- Pass it through the Generator to produce a fake data sample.

- Feed the generated sample to the Discriminator to get a prediction.

- Use the Discriminator’s output to compute the Generator’s loss (which aims to make the Discriminator classify its output as real).

- Perform backpropagation through both models but update only the Generator’s weights.
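
Here is a minimal sketch of those five steps under strong simplifying assumptions: the Generator is G(z) = a * z + c, the Discriminator is D(x) = sigmoid(w * x + b), and all four parameters are plain floats with hypothetical starting values. Gradients flow back through the Discriminator, but only `a` and `c` are changed.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def softplus(s):
    """Stable log(1 + exp(s)); softplus(-s) equals -log(sigmoid(s))."""
    return max(s, 0.0) + math.log1p(math.exp(-abs(s)))

def generator_loss(a, c, w, b, noise):
    """Mean of -log D(G(z)): low when fakes are classified as real."""
    return sum(softplus(-(w * (a * z + c) + b)) for z in noise) / len(noise)

def generator_step(a, c, w, b, noise, lr=0.1):
    """One update of the Generator; the Discriminator (w, b) is left untouched."""
    grad_a = grad_c = 0.0
    for z in noise:
        fake = a * z + c                 # pass the noise through the Generator
        p = sigmoid(w * fake + b)        # Discriminator's prediction
        d_fake = (p - 1.0) * w           # backpropagate through the Discriminator
        grad_a += d_fake * z             # ...and on into the Generator
        grad_c += d_fake
    n = len(noise)
    return a - lr * grad_a / n, c - lr * grad_c / n

rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(64)]  # sample random noise

w, b = 1.0, -2.0   # a fixed Discriminator that favors larger values
a, c = 1.0, 0.0    # initial Generator
before = generator_loss(a, c, w, b, noise)
new_a, new_c = generator_step(a, c, w, b, noise)
after = generator_loss(new_a, new_c, w, b, noise)
print(before, after, new_c)
```

The Generator's offset `c` moves toward the region the Discriminator scores as real, which is the one-dimensional analogue of images becoming more convincing.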

Step 5: Retrain the Discriminator with Fake Data

After the Generator has produced fake samples, train the Discriminator on them as the negative examples it has to detect.

- It tries to distinguish between the genuine data and the fake samples.

- The Discriminator’s weights are updated to better identify artificially generated inputs.
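
The fake-sample update mirrors the real-sample one, with the target flipped from 1 to 0. The sketch below again assumes a one-dimensional toy Discriminator D(x) = sigmoid(w * x + b). The starting weights and the stand-in Generator output are hypothetical.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def softplus(s):
    """Stable log(1 + exp(s)); softplus(s) equals -log(1 - sigmoid(s))."""
    return max(s, 0.0) + math.log1p(math.exp(-abs(s)))

def fake_batch_loss(w, b, fake_batch):
    """Mean binary cross-entropy against the label 'fake' (0)."""
    return sum(softplus(w * x + b) for x in fake_batch) / len(fake_batch)

def discriminator_step_fake(w, b, fake_batch, lr=0.1):
    """One gradient step on generated samples; the Generator is not updated."""
    grad_w = grad_b = 0.0
    for x in fake_batch:
        error = sigmoid(w * x + b)  # prediction minus target (0)
        grad_w += error * x
        grad_b += error
    n = len(fake_batch)
    return w - lr * grad_w / n, b - lr * grad_b / n

rng = random.Random(0)
# Stand-in for Generator output: here simply G(z) = z.
fake_batch = [rng.gauss(0.0, 1.0) for _ in range(64)]

w, b = 0.2, 0.05  # a partly trained Discriminator
before = fake_batch_loss(w, b, fake_batch)
new_w, new_b = discriminator_step_fake(w, b, fake_batch)
after = fake_batch_loss(new_w, new_b, fake_batch)
print(before, after)
```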

Step 6: Train the Generator Based on Discriminator Feedback

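The Discriminator’s verdict on the fake data tells the Generator how well it is performing. The Generator uses this feedback, delivered as gradients of its loss, to adjust its weights so that its outputs appear more realistic. Steps 3 to 6 are then repeated until the generated samples are good enough.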
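
Putting Steps 3 to 6 together gives an alternating loop. The following is a toy sketch, not a production recipe: it assumes one-dimensional data, a two-parameter Generator and a two-parameter Discriminator with hand-written gradients, and hypothetical hyperparameters. Real projects would use a deep learning framework, and even this tiny system may oscillate rather than settle.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def softplus(s):
    """Stable log(1 + exp(s))."""
    return max(s, 0.0) + math.log1p(math.exp(-abs(s)))

def train_toy_gan(steps=200, batch=64, lr=0.05, seed=0):
    """Alternate Discriminator and Generator updates on 1-D data."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0   # Discriminator D(x) = sigmoid(w * x + b)
    a, c = 1.0, 0.0   # Generator G(z) = a * z + c
    history = []
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + c for z in noise]

        # Discriminator update: real -> 1, fake -> 0 (Generator held fixed).
        grad_w = grad_b = 0.0
        for x in real:
            error = sigmoid(w * x + b) - 1.0
            grad_w += error * x
            grad_b += error
        for x in fake:
            error = sigmoid(w * x + b)
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / batch
        b -= lr * grad_b / batch

        # Generator update: push D(G(z)) toward 1 (Discriminator held fixed).
        grad_a = grad_c = 0.0
        for z in noise:
            d_fake = (sigmoid(w * (a * z + c) + b) - 1.0) * w
            grad_a += d_fake * z
            grad_c += d_fake
        a -= lr * grad_a / batch
        c -= lr * grad_c / batch

        # Track the Generator's loss, -log D(G(z)), after both updates.
        g_loss = sum(softplus(-(w * (a * z + c) + b)) for z in noise) / batch
        history.append(g_loss)
    return (w, b), (a, c), history

d_params, g_params, history = train_toy_gan()
print(d_params, g_params, history[-1])
```

Watching how `g_params` and the loss history evolve over the steps is a simple way to see the push and pull between the two networks before moving on to image data.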

Final Thoughts

Generative Adversarial Networks (GANs) are at the forefront of machine learning because of their many uses. GANs create very realistic data, support progress in image processing and help with exciting ideas in creative fields. While challenges like mode collapse and training instability remain, continued research and careful implementation are steadily expanding what GANs can do.

With a suitable approach, GANs are able to revolutionize industries. A common way to start is to experiment on a small benchmark such as the MNIST dataset, optionally in collaboration with an AI development company.

Feeling up to trying out GANs? Start by investigating and finding out what is coming next in machine learning.

Frequently Asked Questions (FAQs)

Q. What is a GAN in AI?

A. Generative Adversarial Networks (GANs) are a fascinating advancement in the field of machine learning. As generative models, GANs are designed to produce new data samples that closely mimic the original training data. For instance, they can generate realistic images of human faces, even though the individuals depicted do not actually exist.

Q. Is GAN the same as generative AI?

A. Not exactly. A Generative Adversarial Network (GAN) is one type of machine learning framework used for developing generative artificial intelligence systems, while generative AI is the broader category that also includes other model families.

Q. Which tasks are best accomplished using generative adversarial networks (GANs)?

A. Generative Adversarial Networks (GANs) are widely used in AI applications such as image creation, image-to-image translation, super-resolution and video synthesis. They have also been explored for text generation in natural language processing (NLP), although this is a less common use.

Q. Do GANs need a lot of data?

A. To effectively train GANs, a substantial amount of real training data is generally needed. For applications like generating images of faces, cats, dogs, or landscapes, such data is typically easy to obtain. It’s worth noting that a more practical application of GANs is to produce new image samples that can be used to support other tasks, such as image classification.
