July 1, 2025

Machine learning is reshaping industries by enabling systems to learn from data and improve over time without explicit programming. From powering virtual assistants to detecting fraud and predicting medical outcomes, the real-world impact is immense. Per a McKinsey report, 55% of organizations have adopted AI in at least one function. In addition, Statista forecasts global AI market revenue to reach over $1.3 trillion by 2032.

For businesses and professionals looking to stay ahead in a data-driven world, it’s crucial to understand the types of ML, how it works, and the benefits and challenges of machine learning.

In this guide, we will break down each of these areas, including key advancements that are shaping the future of intelligent technologies.

What is Machine Learning?

Machine Learning (ML) is a subcategory of Artificial Intelligence that lets systems learn from data, discover patterns, and make decisions without direct human input.

Instead of being explicitly programmed for a task, ML systems use algorithms that are trained on data and improve with experience. The process is iterative and data-driven, so it applies across many sectors: healthcare, finance, manufacturing, marketing, and beyond.

Types of Machine Learning

Before we delve into the types, it is worth noting that different learning models are suited to different problem sets.

Each has its own strengths and recommended use cases, depending on how much labeled data you have and what the task is.

1. Supervised Learning

Supervised learning uses labeled datasets to train algorithms. The model learns from input-output pairs, and then uses what it has learned to predict the output for new, unseen inputs. It works well for classification and regression tasks such as email filtering or predicting stock prices.
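To make the idea of learning from input-output pairs concrete, here is a minimal sketch of a 1-nearest-neighbor classifier in plain Python. The feature choice (link count and exclamation-mark count per email) and the four training examples are hypothetical, chosen only for illustration; a real spam filter would use far richer features and a library model.

```python
import math

def predict_1nn(train, x):
    """Return the label of the training example closest to x (1-nearest neighbor)."""
    nearest = min(train, key=lambda pair: math.dist(pair[0], x))
    return nearest[1]

# Hypothetical labeled data: (features, label) pairs.
# Features are (number of links, number of exclamation marks) in an email.
train = [
    ((0, 0), "ham"),
    ((1, 0), "ham"),
    ((7, 5), "spam"),
    ((9, 8), "spam"),
]

print(predict_1nn(train, (8, 6)))  # an unseen input close to the spam examples
print(predict_1nn(train, (0, 1)))  # an unseen input close to the ham examples
```

The "training" here is simply storing the labeled pairs; prediction copies the label of the most similar stored input.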

2. Unsupervised Learning

Unsupervised learning deals with unlabeled data. It helps uncover hidden patterns or structures within datasets. Clustering and dimensionality reduction are typical applications, making this approach powerful for market segmentation or anomaly detection.
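Clustering can be sketched with a one-dimensional version of k-means (Lloyd's algorithm). The monthly spend figures and the starting centers below are made-up values for illustration, not data from any real segmentation project.

```python
def kmeans_1d(values, centers, iterations=10):
    """Lloyd's algorithm in one dimension: assign points, then recompute centers."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for v in values:
            # Assign each value to its nearest current center.
            idx = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[idx].append(v)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters

# Hypothetical monthly spend per customer; no labels are provided.
spend = [12, 15, 14, 90, 95, 88]
centers, clusters = kmeans_1d(spend, centers=[0.0, 100.0])
print(clusters)
print(centers)
```

No labels are involved: the two groups emerge purely from how the values are distributed.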

3. Reinforcement Learning

Reinforcement learning enables models to make sequences of decisions by rewarding positive outcomes and penalizing mistakes. Commonly used in robotics, game AI, and self-driving cars, it trains agents to maximize cumulative rewards over time through trial and error.
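As a rough illustration of trial-and-error learning, the sketch below runs tabular Q-learning on a tiny corridor. The environment (five states, goal at the right end), the reward values, and the hyperparameters are all assumptions picked to keep the example small.

```python
import random

N_STATES = 5          # states 0..4 in a corridor; state 4 is the goal
ACTIONS = (-1, +1)    # move left or move right

def step(state, action):
    """Apply an action; return (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    if nxt == N_STATES - 1:
        return nxt, 1.0, True      # reward for reaching the goal
    return nxt, -0.01, False       # small penalty for every other step

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action index]
    for _ in range(episodes):
        state = 0
        for _ in range(100):                    # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(2)            # explore: random action
            else:
                a = max((0, 1), key=lambda i: q[state][i])  # exploit: best known action
            nxt, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward reward + discounted best next value.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q

q_table = train()
# Greedy policy per non-terminal state: 0 means left, 1 means right.
policy = [max((0, 1), key=lambda i: q_table[s][i]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy should prefer moving right in every state, since that is the only way to collect the goal reward.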

4. Semi-Supervised Learning

Semi-supervised learning blends a small amount of labeled data with a large pool of unlabeled data. This approach is advantageous when labeling is expensive or time-consuming, but access to raw data is abundant.
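One common semi-supervised recipe is self-training (pseudo-labeling): fit on the few labeled points, label the unlabeled pool with the current model, and refit. The sketch below uses a nearest-centroid rule on one-dimensional toy data; the class names and values are hypothetical, and practical versions usually keep only high-confidence pseudo-labels.

```python
def centroid(values):
    return sum(values) / len(values)

def self_train(labeled, unlabeled, rounds=3):
    """Nearest-centroid self-training: pseudo-label, refit, repeat."""
    pseudo = {label: [] for label in labeled}
    for _ in range(rounds):
        # Fit: one centroid per class from labeled plus pseudo-labeled points.
        centers = {lab: centroid(labeled[lab] + pseudo[lab]) for lab in labeled}
        # Pseudo-label: assign every unlabeled point to its nearest centroid.
        pseudo = {label: [] for label in labeled}
        for x in unlabeled:
            nearest = min(centers, key=lambda lab: abs(x - centers[lab]))
            pseudo[nearest].append(x)
    # Final refit using the last round of pseudo-labels.
    centers = {lab: centroid(labeled[lab] + pseudo[lab]) for lab in labeled}
    return centers, pseudo

labeled = {"low": [1.0], "high": [10.0]}        # one labeled example per class
unlabeled = [2.0, 3.0, 4.0, 7.0, 8.0, 9.0]      # abundant raw data
centers, pseudo = self_train(labeled, unlabeled)
print(pseudo)
print(centers)
```

With only one labeled example per class, the unlabeled pool pulls each centroid toward the true center of its group.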

Related Read: Exploring Top 10 Machine Learning Development Companies of 2025

History of Machine Learning

The idea of machine learning can be traced back to the 1950s. Alan Turing’s work on computing laid foundational ideas. In 1959, Arthur Samuel coined the term “machine learning” while developing a program to play checkers.

Through the decades, ML evolved with milestones like decision trees in the 1980s, support vector machines in the 1990s, and neural networks making a major comeback in the 2010s with the rise of deep learning and big data.

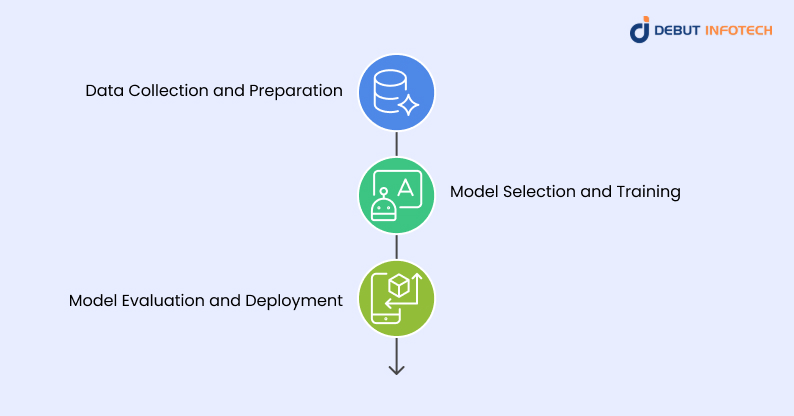

How Does Machine Learning Work?

Machine learning involves a structured pipeline of tasks, from sourcing data to making intelligent decisions. Here’s how it works:

1. Data Collection and Preparation

Data collection is the first step of a machine learning project, where structured and unstructured data are gathered from multiple sources such as APIs, sensors, logs, and databases. The raw data is then cleaned of duplicates, errors, and inconsistencies.

Preprocessing also includes normalization, feature selection, and handling missing values. Without clean, meaningful data, even the most sophisticated algorithms cannot make accurate predictions. Additionally, data needs to be labeled when necessary, especially when supervised learning is involved.
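The cleaning steps described above can be sketched in a few lines of plain Python. The `prepare` helper and the sample rows are hypothetical; the sketch assumes numeric columns with at least one non-missing value each, and it uses mean imputation and min-max scaling as just one of several reasonable choices.

```python
def prepare(rows):
    """Deduplicate rows, fill missing values with the column mean, then min-max scale."""
    # 1. Drop exact duplicate rows while keeping the original order.
    unique = list(dict.fromkeys(rows))
    cleaned_columns = []
    for col in zip(*unique):
        # 2. Impute missing entries (None) with the mean of the observed values.
        present = [v for v in col if v is not None]
        mean = sum(present) / len(present)
        filled = [mean if v is None else v for v in col]
        # 3. Normalize each column to the range [0, 1].
        lo, hi = min(filled), max(filled)
        span = (hi - lo) or 1.0   # avoid division by zero for constant columns
        cleaned_columns.append([(v - lo) / span for v in filled])
    return [tuple(r) for r in zip(*cleaned_columns)]

# Hypothetical raw records: (age, monthly_income); None marks a missing value.
raw = [(20, 1000), (40, None), (20, 1000), (60, 3000)]
clean = prepare(raw)
print(clean)
```

In a real project the mean, minimum, and maximum would normally be computed on the training split only and then reused for validation and test data.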

2. Model Selection and Training

This phase involves selecting an appropriate algorithm—like decision trees, support vector machines, or neural networks—based on the problem type and dataset characteristics. The training process feeds data into the model, allowing it to recognize patterns and optimize parameters through multiple iterations.

Algorithms apply optimization methods like gradient descent to minimize error. It’s essential to use training, validation, and test datasets separately to ensure the model generalizes well to unseen data.
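A minimal sketch of this training loop, assuming a one-feature linear model and synthetic, noise-free data: batch gradient descent minimizes mean squared error on the training split, while separate validation and test splits are held back for checking generalization. The learning rate, epoch count, and 6/2/2 split are illustrative assumptions.

```python
import random

def fit_line(points, lr=0.1, epochs=5000):
    """Fit y = w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(points)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in points) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in points) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(points, w, b):
    return sum((w * x + b - y) ** 2 for x, y in points) / len(points)

# Synthetic, noise-free data drawn from y = 2x + 1 (an assumption for illustration).
data = [(i / 10, 2 * (i / 10) + 1) for i in range(10)]
random.Random(0).shuffle(data)

# Keep the three subsets separate: fit on train, tune on validation, report on test.
train_set, val_set, test_set = data[:6], data[6:8], data[8:]

w, b = fit_line(train_set)
print(round(w, 3), round(b, 3))
print(mse(val_set, w, b), mse(test_set, w, b))
```

Because the data are noise-free, the fitted parameters should land very close to w = 2 and b = 1, and the error on the held-out splits should be near zero.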

3. Model Evaluation and Deployment

After training, the model’s performance is assessed using evaluation metrics tailored to the task—accuracy, F1 score, precision-recall, or AUC-ROC. Overfitting and underfitting are checked by comparing results on training versus test data.
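For a binary classifier, the metrics mentioned above follow directly from the confusion-matrix counts. The sketch below computes them by hand on a small made-up set of labels and predictions; in practice a library routine would typically be used instead.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)   # true positives
    fp = sum(t == 0 and p == 1 for t, p in pairs)   # false positives
    fn = sum(t == 1 and p == 0 for t, p in pairs)   # false negatives
    tn = sum(t == 0 and p == 0 for t, p in pairs)   # true negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical ground truth and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Running the same function on training and test predictions and comparing the two results is a simple way to spot overfitting (training scores far above test scores) or underfitting (both scores low).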

Once validated, the machine learning model is deployed into production environments using APIs or embedded systems. Continuous monitoring post-deployment is vital to track performance drift, and periodic retraining is often required to adapt to new data or changing conditions.
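Post-deployment monitoring can start with something as simple as comparing recent accuracy against the accuracy measured at validation time. The function name, the 0.05 tolerance, and the sample outcomes below are hypothetical; production systems usually track several signals, including shifts in the input data itself.

```python
def needs_retraining(baseline_accuracy, recent_outcomes, tolerance=0.05):
    """Flag drift when recent accuracy falls more than `tolerance` below the baseline.

    recent_outcomes holds 1 for each correct prediction and 0 for each wrong one.
    """
    recent_accuracy = sum(recent_outcomes) / len(recent_outcomes)
    return recent_accuracy < baseline_accuracy - tolerance, recent_accuracy

# Hypothetical monitoring window of ten labeled production predictions.
drifted, recent = needs_retraining(0.92, [1, 1, 0, 1, 0, 1, 1, 0, 1, 0])
print(drifted, recent)
```

A flag like this would only trigger a review or a retraining job; deciding what to retrain on still requires looking at how the incoming data has changed.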

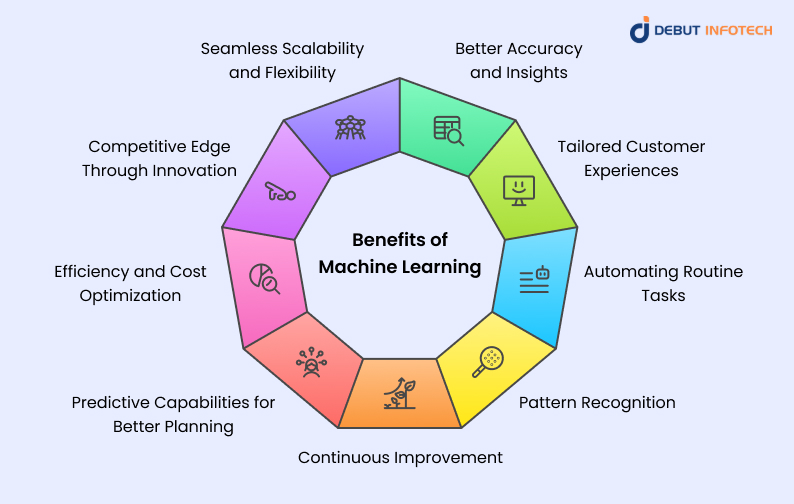

What are the Benefits of Machine Learning?

Here are the benefits of machine learning for organizations:

1. Better Accuracy and Insights

ML algorithms are good at finding hidden patterns or complex relationships between variables, which normal analytics tools might not see. As a result, they deliver predictions and insights with high accuracy, which supports more intelligent decision-making in finance, healthcare, logistics, and much more.

Because models can learn from historical trends and be updated as new data becomes available, their output can stay relevant and reliable over time.

2. Tailored Customer Experiences

Machine learning personalizes digital experiences by analyzing user behavior, preferences, and interactions. It powers recommendation engines on platforms like Netflix and Amazon, delivers dynamic website content, and informs targeted marketing campaigns. This personalization improves customer satisfaction, boosts engagement, and ultimately drives loyalty and conversions.

Businesses using ML can build a deeper, data-driven knowledge of their customers’ needs and deliver more meaningful, timely experiences across multiple touchpoints.

3. Automating Routine Tasks

ML automates repetitive, rule-based tasks such as data entry, invoice classification, fraud detection, and inventory checks. This improves operational efficiency and decreases the risk of human error.

Automating mundane work allows employees to focus on higher-order tasks like strategy, problem-solving, and customer service. Over time, automation contributes to faster workflows, lower operational costs, and a more responsive organization capable of scaling with minimal resource strain.

4. Pattern Recognition

Pattern recognition lies at the heart of machine learning’s strength. It allows models to detect trends, correlations, and anomalies within vast datasets—sometimes in real-time.

Whether it’s flagging fraudulent transactions, diagnosing medical anomalies, or spotting network intrusions, ML systems identify subtle deviations that humans might miss. These capabilities are instrumental in high-volume environments where traditional manual monitoring would be impractical or inefficient.

5. Continuous Improvement

Machine learning models improve with exposure to new data, allowing them to refine their predictions over time. This iterative learning process means that models can adapt to changing trends and user behaviors without being reprogrammed from scratch.

Businesses benefit from smarter systems that keep pace with evolving environments, leading to higher performance and long-term cost-efficiency. Continuous learning also reduces the need for manual intervention, boosting long-term model sustainability.

6. Predictive Capabilities for Better Planning

ML enables accurate forecasting by identifying historical trends and projecting future outcomes. Businesses can use these insights for inventory management, demand forecasting, equipment maintenance, and risk mitigation.

Predictive models help reduce uncertainty and support proactive planning, whether it’s anticipating customer churn or optimizing supply chains. With more informed decision-making, organizations can stay ahead of market changes and respond swiftly to emerging challenges or opportunities.

7. Efficiency and Cost Optimization

By automating complex calculations and repetitive tasks, machine learning drastically reduces time and labor costs. Businesses gain the ability to handle large-scale operations more efficiently without proportionally increasing headcount.

Moreover, machine learning platforms reduce error rates and enhance precision in quality control, logistics, and financial reporting. These efficiencies translate into measurable savings, streamlined workflows, and better use of internal resources, particularly in competitive industries.

8. Competitive Edge Through Innovation

Machine learning in business intelligence helps companies innovate by building intelligent, responsive systems that can identify market gaps and react quickly to customer needs.

From intelligent product recommendations to real-time fraud detection, companies that leverage ML often deliver more innovative services faster than their competitors. This agility allows them to set trends rather than follow them, reinforcing brand strength and maintaining a forward-thinking market position.

9. Seamless Scalability and Flexibility

Machine learning systems are built to scale—whether it’s handling millions of user queries or analyzing terabytes of real-time data. Cloud-based ML infrastructure supports dynamic scaling based on demand, helping organizations grow without infrastructure bottlenecks.

Moreover, these systems can be fine-tuned or retrained to meet evolving needs. The adaptability of ML makes it a sustainable solution for startups and enterprises looking to future-proof their operations.

Challenges of Machine Learning

Despite its potential, implementing machine learning at scale comes with distinct hurdles that demand strategic planning and ongoing attention. Here are some machine learning challenges:

1. Data Quality and Quantity Requirements

ML models depend on high-quality data for effective training. Incomplete, noisy, or biased datasets lead to inaccurate outcomes, misclassifications, or unfair predictions.

Additionally, some algorithms require vast amounts of labeled data—especially in supervised learning—which may not always be readily available. Gathering sufficient, diverse, and well-labeled data is time-consuming and resource-intensive. Yet, it is essential to ensure robust and generalizable model performance.

2. High Computational Costs

Training and deploying complex machine learning models—especially deep learning architectures—demands substantial processing power, memory, and storage. These requirements often necessitate expensive GPUs, distributed computing environments, or high-end cloud services.

For smaller organizations or research teams, the costs can be prohibitive. Balancing performance with affordability is a key challenge, especially as models become increasingly large and resource-hungry.

3. Complexity and Lack of Interpretability

Many advanced ML models, particularly deep neural networks, function as black boxes, delivering predictions without clear insight into their decision-making processes. This lack of transparency can be problematic in high-stakes fields like healthcare or finance, where explainability is critical.

Interpreting these models requires specialized tools and machine learning techniques, and even then, full understanding may be limited. Trust and regulatory compliance become harder to achieve when outcomes can’t be easily explained.

4. Ethical Concerns and Bias

ML systems can unintentionally perpetuate societal biases if the training data reflects skewed or discriminatory patterns. This can lead to unfair outcomes in areas like hiring, policing, or lending. Without rigorous auditing and diverse datasets, models may reinforce inequalities rather than eliminate them.

Ethical implementation requires clear accountability frameworks, inclusive data practices, and ongoing vigilance to ensure fairness and social responsibility.

5. Security Vulnerabilities

Machine learning models can be susceptible to attacks like adversarial inputs—specially crafted data points that manipulate outputs—or data poisoning, where training data is maliciously altered. These vulnerabilities can compromise system integrity, user safety, and data privacy. As ML becomes more widely deployed, securing models against such threats is paramount, especially in domains like cybersecurity, finance, or national infrastructure.
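To show what an adversarial input looks like, the sketch below attacks a toy linear classifier. Each feature is nudged by a small amount in the direction that lowers the score, which is the sign-of-gradient idea behind the fast gradient sign method applied to a linear model. The weights, bias, input, and epsilon are made-up values.

```python
def score(w, b, x):
    """Linear decision score; a positive score means class 1."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def adversarial_example(w, x, epsilon):
    """Shift each feature by epsilon against the sign of its weight.

    For a linear model the gradient of the score with respect to x is w,
    so stepping along -sign(w) is the fastest way to lower the score
    under a per-feature budget of epsilon.
    """
    def sign(v):
        return (v > 0) - (v < 0)
    return [xi - epsilon * sign(wi) for wi, xi in zip(w, x)]

w, b = [2.0, -1.0], -0.5          # hypothetical trained weights and bias
x = [0.5, 0.2]                    # original input, classified as class 1
x_adv = adversarial_example(w, x, epsilon=0.15)

print(score(w, b, x) > 0)         # prediction on the original input
print(score(w, b, x_adv) > 0)     # prediction on the perturbed input
```

A change of at most 0.15 per feature is enough to flip the decision here, which is why input validation and adversarial testing matter for deployed models.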

6. Skill Gap and Expertise Dependency

Developing, deploying, and maintaining machine learning solutions requires specialized skills in data science, software engineering, and domain expertise. However, there’s a global shortage of qualified professionals, creating a bottleneck for organizations seeking to adopt ML.

Additionally, overreliance on a small number of experts can delay project timelines or introduce knowledge silos. Investing in upskilling and cross-functional collaboration is key to overcoming this hurdle.

7. Job Displacement

Automation through machine learning can lead to the displacement of roles that involve repetitive or process-driven tasks. Industries like manufacturing, logistics, and customer service are particularly affected.

While new roles are being created, such as data engineers or AI ethicists, the transition requires significant reskilling initiatives. The human impact must be carefully managed to ensure a fair shift in labor dynamics and long-term workforce resilience.

8. Overfitting

Overfitting occurs when a model performs well on training data but poorly on new, unseen data. This happens when the model learns noise or irrelevant patterns instead of general trends. Overfitted models lack flexibility and fail to generalize, undermining their practical utility.

Techniques like cross-validation, regularization, and dropout are essential to combat this issue and ensure robust, real-world performance.
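Of the techniques listed, cross-validation is the easiest to sketch without a library. The code below splits the data into k folds and averages the validation error across them; the "model" is a hypothetical baseline that always predicts the training mean, used only to keep the example short.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs that partition range(n) into k folds."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size

def cross_val_mse(ys, k):
    """Cross-validated MSE of a baseline that predicts the training-fold mean."""
    scores = []
    for train, val in k_fold_indices(len(ys), k):
        mean = sum(ys[i] for i in train) / len(train)
        scores.append(sum((ys[i] - mean) ** 2 for i in val) / len(val))
    return sum(scores) / len(scores)

folds = list(k_fold_indices(5, 2))
print(folds)
print(cross_val_mse([3.0, 5.0, 4.0, 6.0, 2.0, 4.0], k=3))
```

Every example is used for validation exactly once, so the averaged score is a steadier estimate of performance on unseen data than a single train/test split.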

The Future of Machine Learning

Here are three key developments shaping the future of machine learning.

1. Increased Use of Large-scale Language Models

Large-scale language models like GPT, BERT, and Claude are transforming how machines understand and generate human language. These models contain very large numbers of parameters and are trained on large text corpora, enabling nuanced comprehension, summarization, translation, and content generation.

As computational resources improve, their accuracy, contextual awareness, and application scope are expected to grow. From legal research to medical diagnostics, these models are being integrated into enterprise workflows. They are redefining automation and expanding the boundaries of what machines can understand and communicate.

2. Advances in Transfer Learning

Transfer learning enables models trained on one task to adapt quickly to new, related tasks using minimal data. This significantly reduces training time and resource costs while improving model generalizability. It’s especially valuable in domains with limited labeled data, such as medical imaging or environmental monitoring.

Future applications will likely see transfer learning integrated more seamlessly into industry workflows, accelerating AI adoption in specialized fields and increasing demand for machine learning development services. This shift promises more agile development and smarter deployment across sectors with niche requirements.

3. Growth of Explainable AI

Explainable AI (XAI) is gaining traction as organizations demand greater transparency in decision-making systems. Instead of black-box outputs, XAI tools help stakeholders understand how and why a model arrived at a decision. This is crucial for ethical AI deployment in sectors like finance, healthcare, and law.

As regulations tighten and trust becomes a non-negotiable factor, XAI will become a standard in ML pipelines—bridging the gap between algorithmic efficiency and human interpretability without compromising performance or compliance.
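One widely used model-agnostic explanation technique is permutation importance: shuffle one feature at a time and measure how much accuracy drops. The sketch below is a simplified version with a hypothetical rule-based model and made-up data; it is meant to convey the idea, not to stand in for a full XAI toolkit.

```python
import random

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_features, repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)   # break the link between feature j and the labels
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances

def model(row):
    """Hypothetical model that only looks at the first feature."""
    return int(row[0] > 0.5)

rows = [(0.1, 5.0), (0.9, 1.0), (0.2, 3.0), (0.8, 4.0),
        (0.3, 2.0), (0.7, 6.0), (0.4, 8.0), (0.6, 7.0)]
labels = [0, 1, 0, 1, 0, 1, 0, 1]

importances = permutation_importance(model, rows, labels, n_features=2)
print(importances)
```

The first feature should receive a clearly positive importance, while the second gets exactly zero because the model never reads it. That is the kind of evidence a stakeholder can use to check what a model is actually relying on.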

Conclusion

As machine learning grows, it unlocks more intelligent automation, personalized experiences, and deeper insights across sectors. However, it also introduces new challenges around bias, explainability, and data ethics.

By understanding the benefits and challenges of machine learning, as well as how models are built and deployed, businesses and professionals can make more informed, future-ready decisions.

With ongoing advancements in explainable AI, large-scale models, and transfer learning, the future of ML looks both promising and transformative for every data-driven industry.

FAQs

A. Most companies struggle with messy or limited data, a shortage of the right talent, and unclear goals. It’s also tough to scale prototypes into real products. Integrating machine learning with existing systems can be a technical headache if you don’t plan ahead.

A. It’s not magic—ML can be pretty accurate, but only if the data is solid. It struggles with rare events, noisy inputs, or stuff it hasn’t seen before. And sometimes, the results make no sense unless someone digs into why the model did what it did.

A. They matter a lot. Garbage in, garbage out—if your data is full of errors, your model will learn all the wrong things. And if there’s not enough of it, the system won’t generalize well. Clean, diverse, and well-labeled data is the real MVP.

A. It adds up fast—data storage, cloud computing, model training, plus hiring or upskilling staff. You might also need tools, platforms, and infrastructure. It’s not just a one-time cost; keeping things running and updated takes time and money.

A. Start by upskilling your current team through training or certifications. Bring in consultants or partner with ML experts if needed. Hiring can be slow, so combining internal growth with external support usually works best. Don’t wait for unicorns—build talent gradually.
