Table of Contents

Home / Blog / Artificial Intelligence

Generative AI Security Essentials: What Must Know Before Adoption

June 17, 2025

June 17, 2025

Generative AI security is an increasingly urgent issue in businesses as organizations consider utilizing the capabilities of potent AI systems that can create text, images, and code, among other things. Although generative AI models represent a game-changing technology, automating content generation and design processes or enabling intelligent assistants, they come with risks, including data poisoning, model stealing, or using such models for malicious purposes.

Companies should adopt generative AI security best practices, such as secure model deployment, effective governance, and monitoring, to adopt it safely. This is a guiding text that will help you navigate the technical, ethical, and business considerations needed to keep your enterprise safe and responsible when using generative AI.

Secure Your AI Innovation with Confidence

Looking to implement secure generative AI solutions tailored to your business needs? Trust Debut Infotech’s expertise in enterprise-grade AI security and deployment.

Understanding Generative AI & Its Security Landscape

What Is Generative AI?

Generative AI uses algorithms like generative adversarial networks (GANs) and large language models to create new content based on learned patterns. That is text generation, image synthesis, and code writing, among others. The systems are being facilitated by advanced generative AI models, and are revolutionising industries, whether it be an automatic draft of a report, a realistic design prototype, or even a text-to-speech conversion.

Why Security Matters

Despite its transformative potential, generative AI poses serious security threats:

- Data exposure: Models trained on sensitive or proprietary data can inadvertently regenerate confidential information.

- Adversarial manipulation: Malicious data input can mislead models or degrade performance.

- Model theft and misuse: Attackers may attempt to steal or replicate your models to launch phishing campaigns or defraud users.

- Ethical and compliance risks: Generated content may be biased, misleading, or unlawful, creating reputational and legal hazards.

Understanding and mitigating these risks is crucial for enterprises integrating generative AI in production.

Read Also This Blog: Exploring the Role of Generative AI in Data Quality.

Key Threats to Generative AI Systems

As powerful as generative AI models have become, they are equally vulnerable to a range of sophisticated threats. Understanding these security risks is essential for any enterprise considering integration. Below are the most pressing threats facing generative AI systems today:

1. Data Poisoning

Generative AI models learn from vast datasets, and when that data is compromised—either through malicious tampering or flawed collection processes—the entire model becomes unreliable. Attackers can inject biased, toxic, or misleading inputs during the training phase. These subtle manipulations may not be detectable at first but can lead to dangerous behaviors, such as generating harmful content or embedding backdoors into outputs. For businesses, data poisoning can corrupt internal systems, mislead decision-making, and damage brand trust.

2. Model Extraction & Theft

Many enterprises deploy generative AI through APIs or SaaS platforms, leaving them open to model extraction attacks. In this case, an attacker makes repeated requests to a generative AI API to reverse-engineer and reproduce the model behind it. This intellectual property protection can be removed since the stolen model can be used for other purposes without permission. Worst still, it can be modified to contain misleading content or injected with malicious functionality, spelling doom to the reputation and user base of the original provider.

3. Adversarial Attacks

Adversarial examples are particularly constructed inputs that make generative AI systems fail. These inputs might be normal to a human being but would be programmed to cause unpredictable or unsafe manipulation of the model outputs. For example, an adversary can compel a generative AI to generate sensitive internal information, hallucinate false statements, or create banned material. An adversarial attack may result in serious compliances and operational breaches in an industry like healthcare or finance.

4. Content Misuse

Generative AI may create anything, including lifelike pictures, voice doubles compelling text, and code. In the absence of appropriate governance, it can be utilized by bad actors to create deepfakes, generate phishing emails, or automate misinformation campaigns. This type of content abuse is not only a security concern but also a big reputational and ethical challenge. Companies not implementing prevention measures may be linked with fraud, misinformation, or online impersonation.

5. Model Inversion & Data Leakage

Model inversion is another emerging issue, in which an attacker can take outputs and gain sensitive information about what was used in training- such as user credentials, medical records, or valuable business secrets. This can be a severe problem in production-scale models which are trained with confidential or regulated data. The ability of attackers to reconstruct private inputs, even partially, can lead to data exposure or regulatory fines as frameworks such as GDPR or HIPAA.

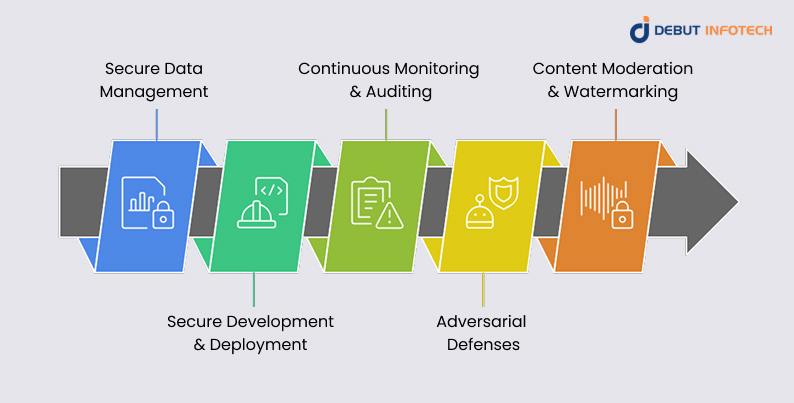

Generative AI Security Best Practices

A. Secure Data Management

- Use vetted, high-quality datasets. Remove sensitive or proprietary information before training.

- Monitor data drift and retrain regularly to prevent stale or biased input.

- Deploy differential privacy to hide individual data traces in model outputs.

B. Secure Development & Deployment

- Encrypt models and data at rest and in transit.

- Use secure enclaves or hardware (e.g., trusted execution environments) for model hosting.

- Restrict model access through API keys, authentication, and rate limiting.

C. Continuous Monitoring & Auditing

- Deploy anomaly detection to spot malicious inputs or unusual API usage patterns.

- Log judgments and model outputs for traceability and compliance.

- Perform regular penetration testing to stress-test safeguards and response procedures.

D. Adversarial Defenses

- Use adversarial training to make models robust against crafted inputs.

- Sanitize input data to filter out suspicious or malicious patterns.

- Add detection layers to reject suspect or manipulated requests.

E. Content Moderation & Watermarking

- Implement output filters to detect harmful or protected content.

- Embed digital watermarks to authenticate content and prevent misuse.

- Establish usage policies outlining acceptable AI-generated content and enforcing consequences for violations.

Organizational Controls & Governance

As enterprises scale their use of generative AI, establishing robust governance and oversight mechanisms becomes crucial. Without strong organizational controls, the risks associated with generative AI security—ranging from data misuse to regulatory non-compliance—can quickly escalate. To safeguard both business operations and reputational standing, enterprises must develop a structured approach to building, deploying, and managing generative AI systems.

Generative AI Integration Services

One of the most efficient ways to ensure secure and scalable generative AI adoption is by working with professional Generative AI Integration Services. These teams are well-versed in embedding security protocols at every layer of the AI pipeline, right from data ingestion to deployment. Generative AI consultants can assist in aligning the technology stack with your enterprise’s security policies, privacy requirements, and operational frameworks, all while leveraging the latest generative AI frameworks and models securely and responsibly.

Vendor & Developer Oversight

If your organization chooses to hire generative AI developers or work with external generative AI development companies, it’s essential to vet their approach to security, ethics, and transparency. Development teams must follow secure coding standards, document all workflows, and offer full visibility into how generative AI models are trained and deployed. This becomes even more important in regulated industries such as finance, healthcare, and government, where the cost of a security lapse can be immense.

An effective governance model should also include collaboration with an experienced AI development company or SaaS development company that can implement enterprise-level controls and reporting mechanisms. These vendors should support audit trails, access control, and encryption to ensure secure deployment and usage of generative AI technologies.

Risk Assessment & Oversight

Security isn’t a one-time setup—it’s an ongoing responsibility. Enterprises must continuously monitor generative AI trends and evaluate how emerging threats could impact their implementations. This means performing regular risk assessments, threat modeling, and internal audits. Organizations can leverage third-party security evaluations, penetration tests, and red-teaming exercises to uncover vulnerabilities before attackers do.

Integrating continuous monitoring and feedback loops also helps businesses refine model performance, reduce hallucinations, and maintain alignment with evolving compliance mandates.

Ethical & Legal Considerations

In addition to technical controls, businesses need to implement a clear ethical framework to govern the responsible use of generative AI. This includes avoiding the generation of harmful, biased, or misleading outputs, as well as enforcing content moderation pipelines. For instance, when working with text to speech models or generative adversarial networks, enterprises must ensure these tools are not used for impersonation or disinformation.

Moreover, legal safeguards such as copyright compliance, data privacy, and informed consent should be prioritized, especially when generating content involving identifiable individuals or sensitive locations. This protects your brand and customers from unintended violations and helps you comply with global regulations.

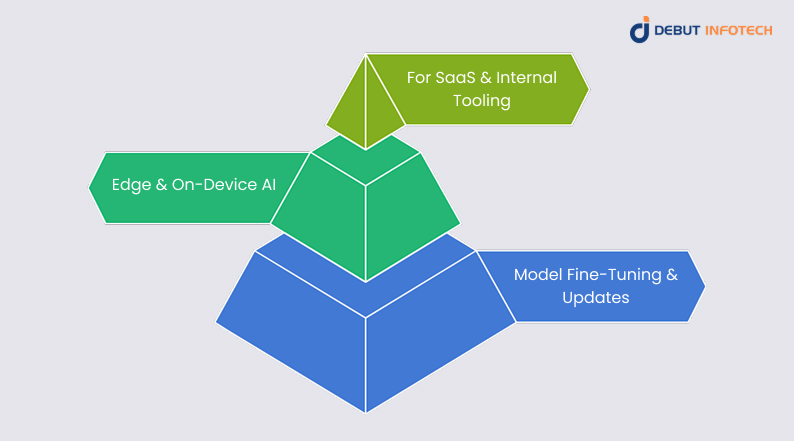

Secure Deployment with Best-in-Class Frameworks

Deploying generative AI models securely is not just about choosing the right tools—it’s about embedding security throughout the deployment lifecycle. From cloud-based SaaS platforms to edge AI systems, maintaining airtight control over model performance, access, and data integrity is vital. Here’s how enterprises can achieve secure, scalable, and responsible deployment using industry-leading frameworks and practices.

For SaaS & Internal Tooling

Partnering with a reliable SaaS development company can provide a security-first foundation when implementing generative AI in SaaS products or internal enterprise tools. This includes:

- Encrypted APIs: Ensure all communication between services is encrypted using TLS 1.3 or higher to prevent man-in-the-middle attacks.

- Strong Identity and Access Management (IAM): Role-based access controls (RBAC), multi-factor authentication (MFA), and least-privilege principles are essential to prevent unauthorized access to AI models and their outputs.

- Vulnerability Scans & Compliance Audits: Before deployment, conduct thorough vulnerability assessments and penetration testing. Continuous scanning for new threats post-deployment helps maintain a secure environment.

These controls are particularly critical when deploying generative AI tools that produce public-facing outputs or sensitive business content, making it essential to enforce high standards of data privacy and system resilience.

Edge & On-Device AI

Generative AI is increasingly being embedded in edge devices through adaptive AI development, powering real-time, context-aware applications such as text-to-speech models and mobile assistants. While these deployments reduce latency and dependency on cloud infrastructure, they also introduce new security challenges.

To mitigate these risks:

- Local Model Security: Ensure the AI model stored on the device is encrypted and protected with device-specific keys.

- Firmware Validation: Implement secure boot processes and firmware integrity checks to prevent model tampering at the hardware level.

- Sandboxing & Isolation: Run models within secure environments to isolate them from the rest of the system and reduce attack surfaces.

These techniques help ensure that generative models deployed on personal or industrial devices remain trustworthy, even if the underlying infrastructure is exposed to hostile environments.

Model Fine-Tuning & Updates

As generative AI evolves, continuous improvement through model fine-tuning is common—but it must be done securely to avoid introducing risks:

- Secure Update Pipelines: Automate model patching and deployment through CI/CD pipelines that include rigorous security gates and access controls.

- Model Drift Monitoring: Actively monitor for performance degradation or concept drift in deployed generative AI models. Unexpected behavior may signal adversarial manipulation or degraded accuracy.

- Verified Training Data: Always retrain models using curated datasets that have been vetted for bias, security, and legal compliance. Avoid using scraped or publicly unverifiable content.

- Model Lineage Tracking: Use metadata tracking tools to record how each version of the model was trained, fine-tuned, and deployed. This allows for full auditability and rollback if needed.

These practices improve the robustness and compliance of AI systems and support long-term scalability as businesses expand their use of generative AI.

Related Read: Generative AI in Manufacturing: A New Era of Intelligent Production

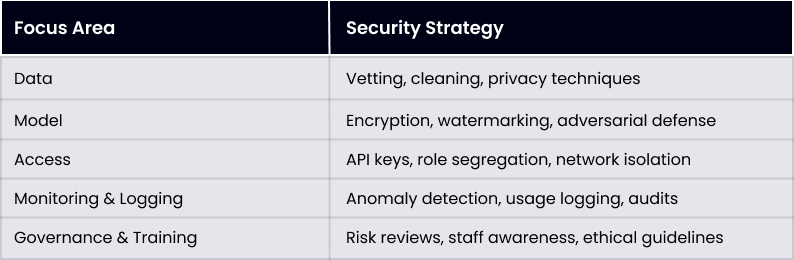

Practical Generative AI Security Framework

Examples Across Industries

Finance

Financial firms use generative AI for risk modeling and report generation. Security includes encrypted pipelines, fine-grained logs, and continuous compliance reviews.

Healthcare

Medical document generation demands strict privacy and compliance. Training with de-identified data and applying explainable AI principles ensure safe usage.

Media & Marketing

Brands using AI-generated content deploy watermarking, plagiarism checks, and filters to maintain brand integrity and control over artificial content.

The Role of Emerging Generative AI Technologies

- Generative adversarial networks are used for watermark or anomaly detection but also need anti-spoofing safeguards.

- Generative AI frameworks like TensorFlow and PyTorch are integrating security modules and secure inference.

- Future of AI systems will offer explainable, secure, and adaptive models that evolve with enterprise environments.

Preparing Securely for Generative AI Implementation

- Conduct risk assessments tailored to generative AI’s unique threats.

- Define security policies specific to model training, data handling, and deployment.

- Train staff on attack vectors and encourage reporting of anomalies.

- Run pilot projects, monitor performance, and adjust security posture iteratively.

- Partner with experts—whether AI development company, consultants, or legal advisors—for robust model governance.

Partner with Experts in Generative AI Security

From model protection to ethical deployment, our team ensures your AI projects are future-proof and fully compliant.

Conclusion

Enterprises integrating generative AI must balance innovation with responsibility. Generative AI security is essential to protect data, models, and users while enabling powerful applications in content creation, automation, and insights. By adopting layered security, continuous governance, and secure-by-design development, organizations can safely leverage generative AI’s full potential.

Staying ahead of emerging threats—from adversarial hacking to output misuse—will be crucial as these systems evolve. Combining proactive monitoring, expert oversight, and secure deployment in your AI strategy ensures enterprises can thrive in a world increasingly shaped by intelligent, generative machines.

Frequently Asked Questions

Q. What is generative AI security, and why is it important for enterprises?

A. Generative AI security refers to the measures and strategies used to protect generative AI models, data, and systems from threats such as adversarial attacks, data leakage, model theft, and misuse. For enterprises, securing generative AI is critical to prevent reputational damage, regulatory violations, and unauthorized use of intellectual property.

Q. How can enterprises protect their generative AI models from being stolen or replicated?

A. Enterprises can protect their models by implementing API rate limiting, watermarking model outputs, obfuscating architecture, and using encrypted endpoints. Partnering with a trusted generative AI development company and following generative AI security best practices also ensures model confidentiality and protection from extraction attacks.

Q. What are the risks of using public or unverified datasets to train generative AI models?

A. Using public or low-quality datasets increases the risk of data poisoning, bias, and intellectual property violations. Compromised training data can produce misleading or harmful outputs. Enterprises should only use verified, consented datasets and regularly audit for accuracy and security.

Q. How does integrating generative AI impact SaaS platform security?

A. When integrating generative AI into SaaS platforms, enterprises must adopt secure development practices such as encrypted APIs, strong IAM policies, and routine vulnerability assessments. Collaborating with a SaaS development company ensures a secure and scalable deployment that aligns with enterprise-level compliance.

Q. Can smart devices using generative AI be secured against tampering or data leakage?

A. Yes. Devices using adaptive AI development and text-to-speech models can be secured using techniques like model encryption, firmware validation, and sandboxing. These controls help protect on-device AI models from manipulation or unauthorized access in edge computing environments.

Q. What role do generative AI consultants play in securing enterprise AI projects?

A. Generative AI consultants help enterprises assess risk, define governance policies, choose secure generative AI frameworks, and implement compliance measures. They offer expertise across integration, deployment, and post-launch monitoring, helping businesses align AI use with industry best practices and regulatory expectations.

Q. How often should enterprises audit and update their generative AI security measures?

A. Security audits should be conducted regularly—at least quarterly—or whenever major updates or model retraining occurs. Continuous monitoring of generative AI trends and threat intelligence allows organizations to adapt quickly. Keeping security protocols current is essential for safe innovation and long-term AI sustainability.

Talk With Our Expert

Our Latest Insights

USA

Debut Infotech Global Services LLC

2102 Linden LN, Palatine, IL 60067

+1-708-515-4004

info@debutinfotech.com

UK

Debut Infotech Pvt Ltd

7 Pound Close, Yarnton, Oxfordshire, OX51QG

+44-770-304-0079

info@debutinfotech.com

Canada

Debut Infotech Pvt Ltd

326 Parkvale Drive, Kitchener, ON N2R1Y7

+1-708-515-4004

info@debutinfotech.com

INDIA

Debut Infotech Pvt Ltd

Sector 101-A, Plot No: I-42, IT City Rd, JLPL Industrial Area, Mohali, PB 140306

9888402396

info@debutinfotech.com

Leave a Comment