LlamaIndex vs LangChain: Everything You Need to Know

August 28, 2025

When businesses and developers step into the world of Large Language Models (LLMs), one of the most pressing comparisons is LlamaIndex vs LangChain. Both tools have emerged as powerful frameworks for building, managing, and optimizing applications powered by advanced LLMs. While LangChain focuses on creating flexible pipelines to connect LLMs with data sources, APIs, and user interfaces, LlamaIndex provides streamlined ways to structure and query data for these models. Understanding their unique strengths, limitations, and how they complement each other is critical for organizations investing in AI-driven applications.

This article provides a detailed breakdown of LangChain vs LlamaIndex, including what each framework is, its core components, its use cases, and how the two integrate. Along the way, we will highlight where LLM Development Companies, Generative AI Developers, and consulting firms fit into this ecosystem. By the end, you’ll clearly understand how these tools shape the future of LLM applications.

Turn Complex AI Ideas into Reality

Partner with Debut Infotech, a leading Generative AI consulting company, to harness the full power of LLM Models, LlamaIndex, and LangChain for scalable, future-ready solutions.

What is LlamaIndex?

Before comparing the two frameworks, it’s essential to answer the question: What is LlamaIndex?

LlamaIndex is an LLM data framework designed to help developers connect external, domain-specific data to LLMs. Large Language Models are powerful but limited: they are trained on vast datasets, yet they cannot access or understand your organization’s private knowledge. LlamaIndex was designed to solve that problem by acting as a data interface layer.

What does LlamaIndex do?

- Data ingestion: Collects and organizes data from multiple sources such as APIs, databases, or files.

- Indexing: Converts that data into structures that LLMs can quickly search through.

- Retrieval: Fetches only the most relevant pieces of data during a user query, improving accuracy and efficiency.

- Integration: Works seamlessly with popular LLM models from providers like OpenAI, Anthropic, or open-source solutions.

In short, LlamaIndex bridges your data and your LLM, ensuring responses are grounded in the proper context.
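The ingest, index, and retrieve steps above can be illustrated with a small standard-library sketch. It is a toy keyword index, not the LlamaIndex API: the `ToyIndex` class, its method names, and the sample documents are all hypothetical, and a real deployment would typically use embeddings rather than token overlap.

```python
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


class ToyIndex:
    """Hypothetical stand-in for an index; this is NOT the LlamaIndex API."""

    def __init__(self) -> None:
        self.docs = {}                    # doc_id -> original text
        self.postings = defaultdict(set)  # token -> ids of docs containing it

    def ingest(self, doc_id: str, text: str) -> None:
        # Ingestion + indexing: keep the text and record which docs hold each token.
        self.docs[doc_id] = text
        for token in set(tokenize(text)):
            self.postings[token].add(doc_id)

    def retrieve(self, query: str, top_k: int = 1) -> list[str]:
        # Retrieval: score each doc by how many distinct query tokens it contains.
        scores = Counter()
        for token in set(tokenize(query)):
            for doc_id in self.postings.get(token, ()):
                scores[doc_id] += 1
        ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
        return [doc_id for doc_id, _ in ranked[:top_k]]


index = ToyIndex()
index.ingest("hr_policy", "Employees accrue vacation days every month.")
index.ingest("manual", "Reset the router by holding the power button.")
print(index.retrieve("How do I reset my router?"))
```

Only the best-matching document is handed on to the model, which is the idea behind fetching "only the most relevant pieces of data" at query time.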

Core LlamaIndex Components

To understand its utility, we need to explore LlamaIndex components. These components form the backbone of how the framework operates:

- Data Connectors: These enable the ingestion of unstructured and structured data (PDFs, APIs, databases, web pages, etc.).

- Data Indexing: Structures the data into indexes that LLMs can query efficiently.

- Query Interface: Provides natural language query capabilities, allowing LLMs to fetch relevant data.

- Retrieval Augmented Generation (RAG) Support: Powers retrieval-based systems, improving LLM outputs by grounding them in reliable, real-world information.

- Integration APIs: Ensures the framework works seamlessly with other AI pipelines, including LangChain.

Together, these LlamaIndex components allow businesses to leverage AI without retraining entire LLM models from scratch, reducing AI development costs while maximizing efficiency.
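To make the RAG idea concrete, here is a minimal sketch of the "augmentation" step: picking the most relevant passages and placing them in the prompt so the model answers from them. The function names, the word-overlap scoring, and the sample passages are assumptions made for illustration; they do not come from LlamaIndex.

```python
def overlap_score(question: str, passage: str) -> int:
    """Count the distinct words shared by the question and the passage."""
    return len(set(question.lower().split()) & set(passage.lower().split()))


def build_grounded_prompt(question: str, passages: list[str], top_k: int = 2) -> str:
    """Rank passages by overlap and embed the best ones as context in the prompt."""
    ranked = sorted(passages, key=lambda p: overlap_score(question, p), reverse=True)
    context = "\n".join(f"- {p}" for p in ranked[:top_k])
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


passages = [
    "refunds are issued within 14 days of purchase",
    "shipping takes 3 to 5 business days",
    "support is available on weekdays",
]
prompt = build_grounded_prompt("when are refunds issued", passages, top_k=1)
print(prompt)
```

Because the context is supplied at query time, the underlying model does not need to be retrained when the documents change.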

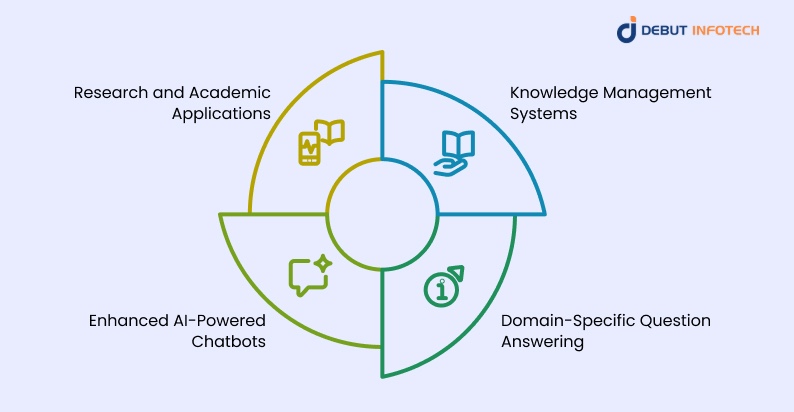

LlamaIndex Use Cases

The utility of LlamaIndex comes from the many ways an organization can deploy it to get more value from its data and knowledge base. Unlike many generalized frameworks, LlamaIndex concentrates most of its effort on ingesting and structuring data. This functionality becomes indispensable when raw, unstructured, or domain-specific datasets are being prepared for LLMs.

Knowledge Management Systems

Organizations with giant repositories of research papers, policy documents, contracts, or scientific data may find it challenging to make this information accessible to their users. LlamaIndex allows the team to build knowledge retrieval systems so that queries can be answered directly from the indexed data rather than relying purely on an external search.

Domain-Specific Question Answering

One of the strongest LlamaIndex use cases lies in industries like healthcare, law, and finance, where models must rely on domain-specific knowledge. By indexing proprietary datasets, organizations make it more likely that LLMs generate accurate, compliant responses grounded in source material rather than speculative ones.

Enhanced AI-Powered Chatbots

Integrating LlamaIndex with an AI chatbot broadens the knowledge the chatbot can draw on. Instead of being restricted to the training data of a generalized model, the chatbot can answer from up-to-date sources such as product manuals, HR policies, and customer support documentation.

Research and Academic Applications

LlamaIndex can be the backbone of semantic search, summarization, and intelligent tutoring systems in academic and research institutions. It helps give students and researchers contextually relevant, curated content that a general-purpose search engine would be unlikely to surface.

These LlamaIndex use cases demonstrate why enterprises lean on frameworks like this instead of retraining LLM Models at enormous cost. By leveraging these scenarios, organizations can maximize the value of their internal data while enhancing the reliability of LLM-driven outputs.

Overview of LangChain

LangChain, on the other hand, is a modular framework built to orchestrate LLM-powered applications. It is the “glue” connecting LLMs with tools, APIs, and data sources. Where LlamaIndex excels at structuring and retrieving knowledge, LangChain shines in creating complex workflows and chaining multiple operations together.

For instance, an AI-powered chatbot built on LangChain could query knowledge, interact with APIs, perform calculations, or integrate with business systems. This makes LangChain ideal for more dynamic, multi-step AI applications.

LangChain focuses on:

- Prompt engineering and optimization

- Tool integration (connecting LLMs to APIs, databases, and external software)

- Workflow automation

- Memory components to retain context over longer conversations

If LlamaIndex is about connecting LLMs to data, LangChain is about orchestrating how an LLM reasons, interacts with tools, and executes tasks.
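The memory idea in the list above can be sketched as a sliding window over recent conversation turns. This is a simplified illustration, not LangChain's memory API: `WindowMemory`, `max_turns`, and `as_prompt` are hypothetical names.

```python
from collections import deque


class WindowMemory:
    """Hypothetical buffer that keeps only the most recent conversation turns."""

    def __init__(self, max_turns: int = 3) -> None:
        # A bounded deque silently drops the oldest turn once it is full.
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def as_prompt(self, new_message: str) -> str:
        # Prepend the remembered turns so the model sees recent context.
        history = "\n".join(f"{role}: {text}" for role, text in self.turns)
        latest = f"user: {new_message}"
        return f"{history}\n{latest}" if history else latest


memory = WindowMemory(max_turns=2)
memory.add("user", "hi")
memory.add("assistant", "hello")
memory.add("user", "my order is late")
print(memory.as_prompt("what now?"))
```

Capping the window keeps prompts short; other designs summarize older turns instead of dropping them.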

What does LangChain include?

- Chains: Sequences of calls that structure complex reasoning workflows.

- Agents: AI agents that can decide what action to take next (e.g., querying a database or calling an API).

- Prompts: Templates for structuring instructions to LLMs.

- Integrations: Connects with APIs, search engines, databases, and now, LlamaIndex.

This makes LangChain the backbone for building AI copilots, automation systems, and intelligent agents.
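As a rough illustration of what "chaining" means, the sketch below composes a prompt template, a model call, and an output parser into one callable. It uses only the standard library and is not LangChain's API: `make_chain`, `fake_llm`, and the other names are hypothetical, and `fake_llm` stands in for a real model call. It assumes Python 3.9+ for `str.removeprefix`.

```python
from typing import Callable

Step = Callable[[str], str]


def make_chain(*steps: Step) -> Step:
    """Compose steps left to right so each output feeds the next step."""
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value
    return run


def prompt_template(topic: str) -> str:
    # Prompt step: wrap the raw input in an instruction.
    return f"Summarize in one line: {topic}"


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call; it only wraps the prompt.
    return f"MODEL OUTPUT <{prompt}>"


def strip_wrapper(text: str) -> str:
    # Parser step: remove the wrapper added by fake_llm.
    return text.removeprefix("MODEL OUTPUT <").removesuffix(">")


chain = make_chain(prompt_template, fake_llm, strip_wrapper)
print(chain("vector indexes"))
```

Swapping `fake_llm` for a real client, or adding a retrieval step before the prompt, changes one link without touching the rest of the chain.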

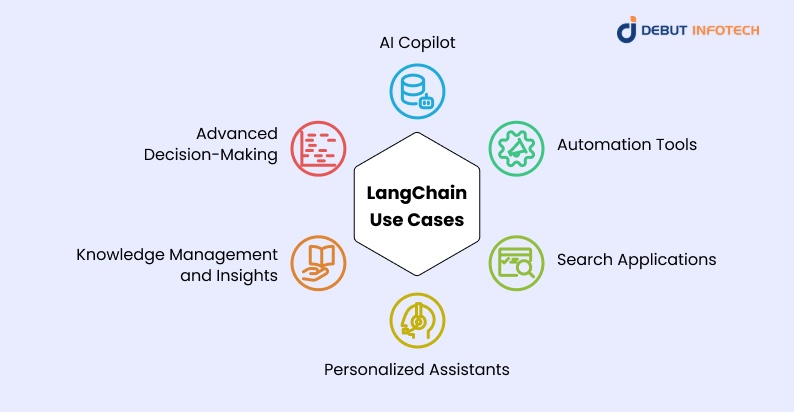

LangChain Use Cases

LangChain’s flexibility makes it one of the most impactful frameworks for building LLM-powered applications across industries. Its ability to chain prompts, integrate external data, and connect with third-party systems makes it a go-to choice for enterprises and startups. Let’s look at some of the most prominent use cases of LangChain and how they add real-world value:

AI Copilot

LangChain is frequently used to build AI copilots—intelligent assistants that support developers, analysts, and decision-makers. In software development, these copilots can automatically suggest code snippets, detect bugs, or even generate test cases by pulling knowledge from repositories and documentation. Beyond engineering, businesses use AI copilots built with LangChain for financial forecasting, market research, and strategic decision-making, essentially acting as an extra “thinking partner” that reduces human error while improving productivity.

Automation Tools

Automation is one of the strongest suits of LangChain. By integrating with systems such as CRMs, ERPs, ticketing platforms, and workflow managers, LangChain enables organizations to automate repetitive tasks. This includes generating customer responses, summarizing support tickets, processing invoices, or routing internal approvals. The advantage lies in its ability to combine retrieval (fetching relevant data) with reasoning (making context-based decisions)—a step beyond traditional robotic process automation (RPA). Companies looking to improve operational efficiency increasingly rely on LangChain-powered automation to save time and reduce costs.

Search Applications

LangChain enhances search experiences by combining retrieval-based search with LLM reasoning abilities. Unlike keyword-based search engines, LangChain allows users to ask questions in natural language and receive contextually accurate results. For example, enterprises can integrate LangChain to search across internal documentation, legal databases, or research repositories with precision. Similarly, in e-commerce, LangChain-based search tools can interpret buyer intent and provide more relevant product recommendations, significantly improving user satisfaction.

Personalized Assistants

Another essential use case is the development of personalized assistants. Businesses increasingly leverage LangChain to build custom virtual assistants tailored to their unique needs. These assistants can be fine-tuned for industry-specific tasks—like a healthcare assistant that provides real-time support to doctors, or a financial assistant that generates client-ready reports. Unlike generic chatbots, LangChain-powered assistants can integrate deeply with organizational knowledge bases and APIs, offering responses and actions aligned with company data, policies, and goals.

Knowledge Management and Insights

LangChain also plays a crucial role in knowledge management systems, where organizations struggle to centralize and make sense of massive unstructured data. By connecting multiple LLMs with databases, LangChain enables businesses to query documents, summarize reports, and extract insights in seconds. This use case has become vital in research-driven industries such as pharmaceuticals, law, and academia, where quick and accurate data access is critical.

Advanced Decision-Making

For finance, logistics, and supply chain industries, LangChain supports real-time decision-making by connecting predictive models with historical and real-time data streams. For instance, a supply chain manager can query an assistant built with LangChain to identify potential bottlenecks, get AI-driven recommendations, and simulate possible solutions—all in one interface.

LlamaIndex vs LangChain: A Direct Comparison

Now that we’ve outlined both frameworks, let’s break down LangChain vs LlamaIndex in terms of their design and goals.

| Aspect | LangChain | LlamaIndex |
| --- | --- | --- |
| Primary Focus | Workflow orchestration and chaining tasks for LLMs | Data ingestion, indexing, and retrieval optimization |
| Best For | Complex applications with multiple steps and integrations | Domain-specific knowledge retrieval and dataset optimization |
| Core Strength | Multi-agent workflows, integrations, and tools like search APIs | Building retrieval pipelines from structured/unstructured data |
| Use Cases | Virtual assistants, AI copilots, intelligent automation | Knowledge management, chatbots, Q&A systems |
| Integration | Supports custom pipelines with external APIs and databases | Strong connectors for datasets and hybrid retrieval |
| Ease of Use | Requires more setup for beginners | Developer-friendly with a data-first approach |

Ultimately, these frameworks are complementary rather than competitive. When evaluating LlamaIndex vs LangChain, organizations should ask: do they need more control over AI workflows and multi-step automation, or a reliable way to index and retrieve internal knowledge? The answer often determines which tool should take priority, or whether both should be used together.

LlamaIndex LangChain Integration

The strongest approach often lies in LlamaIndex LangChain integration. Developers can use LlamaIndex inside LangChain so that agents built in LangChain can query indices created by LlamaIndex.

By integrating the two:

- LlamaIndex provides structured, queryable knowledge sources.

- LangChain orchestrates the workflow, deciding when and how to use LlamaIndex with other tools.

For example:

- A customer support AI agent in LangChain can use LlamaIndex to fetch relevant knowledge base documents.

- A business automation pipeline can use LangChain for orchestration while relying on LlamaIndex to ground responses in accurate data.

This integration enhances accuracy and ensures flexibility and scalability for organizations building enterprise-grade AI applications.
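The division of labor described above can be sketched as an orchestrator that treats an index-backed query engine as one tool among several. Everything here is a simplified assumption rather than either library's real interface: `Tool`, `ToyQueryEngine`, `route`, and the keyword-based routing rule are hypothetical, and a real agent would let the LLM choose the tool.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str
    run: Callable[[str], str]


class ToyQueryEngine:
    """Hypothetical stand-in for an index-backed query engine."""

    def __init__(self, documents: dict[str, str]) -> None:
        self.documents = documents

    def query(self, question: str) -> str:
        # Return the document text sharing the most words with the question.
        words = set(question.lower().replace("?", "").split())
        best = max(
            self.documents.values(),
            key=lambda text: len(words & set(text.lower().split())),
        )
        return best


def route(question: str) -> str:
    # Placeholder policy; an LLM-driven agent would make this decision.
    return "escalate" if "human" in question.lower() else "knowledge_base"


def answer(question: str, tools: dict[str, Tool]) -> str:
    tool = tools[route(question)]
    return f"[{tool.name}] {tool.run(question)}"


engine = ToyQueryEngine({
    "returns": "Items can be returned within 30 days.",
    "shipping": "Orders ship within 2 business days.",
})
tools = {
    "knowledge_base": Tool("knowledge_base", "Search support docs", engine.query),
    "escalate": Tool("escalate", "Hand off to a person", lambda q: "Routing you to a support agent."),
}
print(answer("How long do orders take to ship?", tools))
```

The retrieval side stays behind a single `query` call, so the orchestration layer can add or replace tools without knowing how the index is built.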

Why Businesses Care About This Debate

For enterprises, the LlamaIndex vs LangChain debate is not about choosing one over the other but how to combine them effectively.

- LLM Development Services: These often use both to deliver robust enterprise-grade solutions.

- Generative AI Development Services: Pair LangChain’s workflow orchestration with LlamaIndex’s data connectivity.

- Generative AI Developers: Gain flexibility to build copilots, chatbots, or automation pipelines.

- Generative AI Integration Services: Ensure smooth alignment of these tools within existing enterprise systems.

- Generative AI consulting company: Helps businesses evaluate which framework or integration pattern fits their strategy.

LLM Models and AI Trends

The rapid adoption of LLM Models has transformed how businesses approach automation, knowledge retrieval, and customer engagement. Yet, the actual value of these models lies not just in their raw power but in the frameworks that make them practical—this is where LlamaIndex and LangChain stand out.

- LLM Models as enterprise drivers: Large Language Models are now at the center of enterprise AI. However, they often generate generic or inaccurate responses without structured ways to connect them to private datasets. LlamaIndex solves this gap by serving as a data framework that enables LLMs to query, index, and reason over proprietary information, ensuring more precise and business-relevant outputs.

- The rise of AI agents: Another clear trend is the growing demand for AI agents—systems capable of carrying out complex workflows without constant human intervention. LangChain plays a key role here as the orchestration layer that allows LLMs to interact with APIs, databases, and external tools. Instead of being static models, they become dynamic problem-solvers.

- Agentic AI vs. AI Agents: This evolution also raises an important distinction: What is Agentic AI vs AI Agents? AI agents typically perform specific tasks, while Agentic AI emphasizes autonomy and adaptive reasoning. LangChain’s design reflects this movement, enabling the construction of multi-step, decision-making systems that resemble human-like intelligence.

- Automation blending with intelligence: The boundary between intelligent automation and AI is disappearing. Companies are no longer satisfied with rigid automation; they want systems that adapt in real time. LlamaIndex and LangChain push this trend forward—merging reliable data handling with flexible orchestration to create more natural, personalized, and efficient automation.

Cost and Development Considerations

Enterprises evaluating AI development services also weigh AI development cost. Using frameworks like LlamaIndex and LangChain can reduce development time by providing pre-built modules.

Partnering with an AI agent development company or exploring AI consulting services can help businesses determine whether they need retrieval-heavy systems, workflow automation, or both.

The next step for those ready to scale is often to hire AI Agent developers with expertise in both frameworks to build custom applications.

Supercharge Your AI Projects with the Right Framework

Whether you choose LlamaIndex for more intelligent data handling or LangChain for seamless orchestration, our Generative AI Developers can help you implement the best solution for your business.

Conclusion

The discussion of LangChain vs LlamaIndex is not about competition but complementary functionality. LlamaIndex excels at indexing and connecting data sources, while LangChain orchestrates how LLMs reason and act. Together, they enable businesses to build robust AI systems—from enterprise chatbots to copilots and intelligent automation.

At Debut Infotech, we believe that the future of LLM applications lies in integration, not isolation. Businesses looking to stay ahead should explore how these tools can work together, supported by strong partnerships with an LLM development company and experts from a Generative AI consulting company. By leveraging both frameworks, enterprises can build AI systems that are more accurate, efficient, and impactful.

Frequently Asked Questions

Q. What is the main difference between LlamaIndex and LangChain?

A. The most significant difference is in focus. LlamaIndex connects LLMs to external data sources (documents, APIs, databases) and optimizes retrieval pipelines. LangChain, on the other hand, is a broader framework for building end-to-end LLM applications with tools like prompt chaining, agents, and integration with third-party systems.

Q. Can I use LlamaIndex and LangChain together?

A. Yes. Many developers combine both frameworks. LlamaIndex is often used for retrieval-augmented generation (RAG)—feeding external data into the LLM—while LangChain orchestrates workflows, agents, and reasoning tasks. Together, they offer more powerful solutions than either tool alone.

Q. Which is better for building chatbots: LlamaIndex or LangChain?

A. If your chatbot needs to access, retrieve, and summarize external knowledge (like PDFs, SQL databases, or APIs), LlamaIndex is often the better choice. If your chatbot requires complex reasoning, multi-step workflows, or tool integrations (like connecting with CRMs or ticketing systems), then LangChain is more suitable. For many chatbot projects, a hybrid of both works best.

Q. Is LlamaIndex easier to learn than LangChain?

A. Yes, generally. LlamaIndex has a more straightforward setup, especially for RAG pipelines and document-based queries. LangChain offers more flexibility but also has a steeper learning curve since it supports advanced features like agents, prompt chaining, and API orchestration.

Q. Do LlamaIndex and LangChain support multiple LLMs?

A. Yes. Both frameworks are model-agnostic, meaning you can integrate them with different LLMs like OpenAI’s GPT models, Anthropic’s Claude, Meta’s LLaMA, and open-source models like Falcon or Mistral. This flexibility makes them useful across various enterprise and research use cases.
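One way to picture "model-agnostic" is application code written against a small interface, with each provider plugged in behind it. The sketch below is an illustration only: `ChatModel`, `EchoModel`, and `ShoutModel` are hypothetical names, and the two toy classes stand in for real provider clients.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Minimal interface the application depends on (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    # Stand-in for one provider's client.
    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutModel:
    # Stand-in for a different provider's client.
    def complete(self, prompt: str) -> str:
        return prompt.upper()


def summarize(model: ChatModel, text: str) -> str:
    # Application logic never names a specific provider.
    return model.complete(f"Summarize: {text}")


print(summarize(EchoModel(), "rag"))
print(summarize(ShoutModel(), "rag"))
```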

Q. Which industries benefit most from LangChain and LlamaIndex?

A. It depends on the kind of workload:

1. LlamaIndex is widely used in research, legal, healthcare, and knowledge management, where querying large document sets is essential.

2. LangChain thrives in finance, customer service, logistics, and enterprise automation, where workflows require reasoning and system integration.

Q. How do I decide whether to use LlamaIndex or LangChain for my project?

A. The choice depends on your goal:

– Use LlamaIndex if your focus is on retrieval, summarization, and knowledge-based queries.

– Use LangChain if you need multi-step reasoning, tool integration, or building complex AI agents.

For many advanced projects, combining both yields the most effective results.
